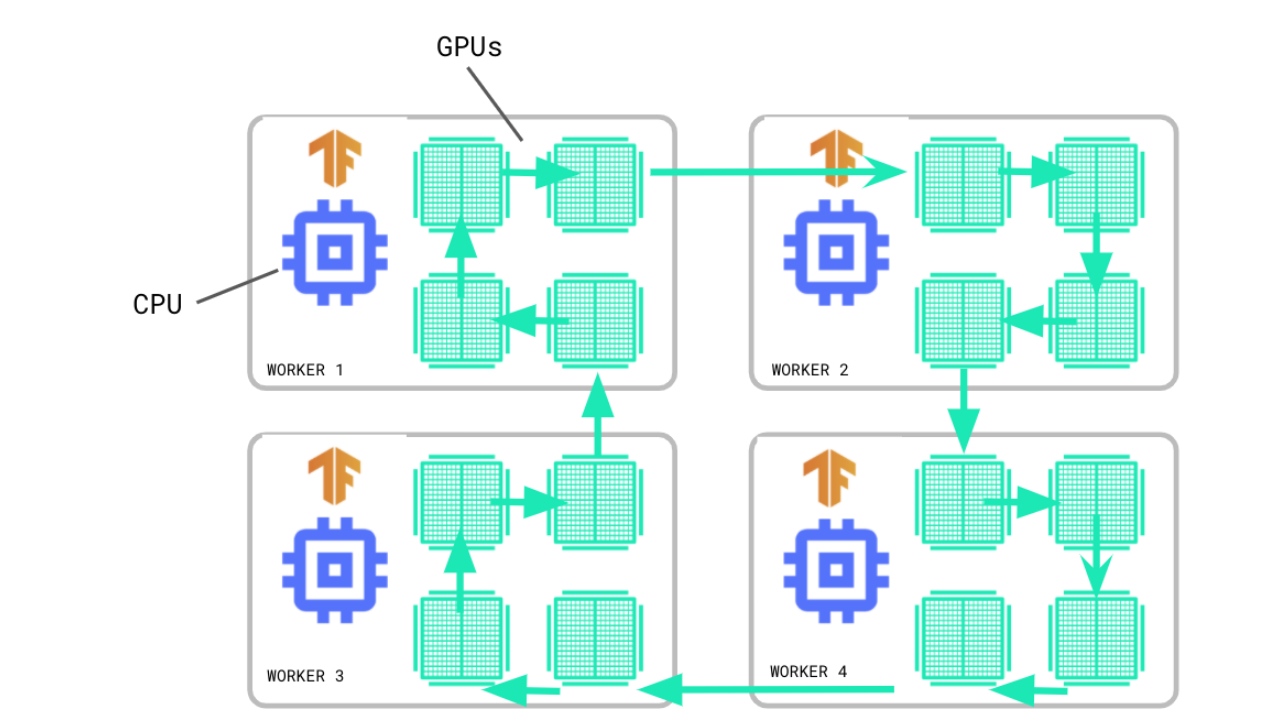

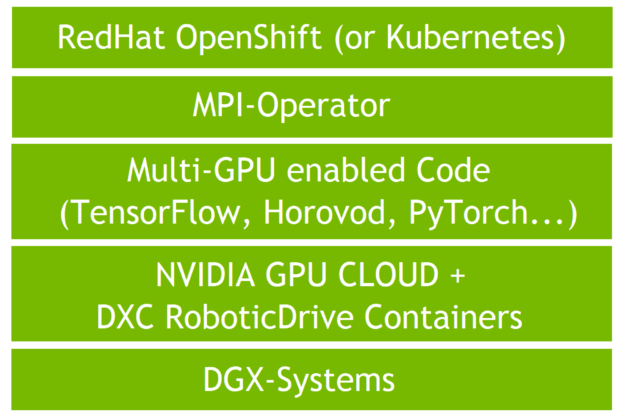

Validating Distributed Multi-Node Autonomous Vehicle AI Training with NVIDIA DGX Systems on OpenShift with DXC Robotic Drive | NVIDIA Technical Blog

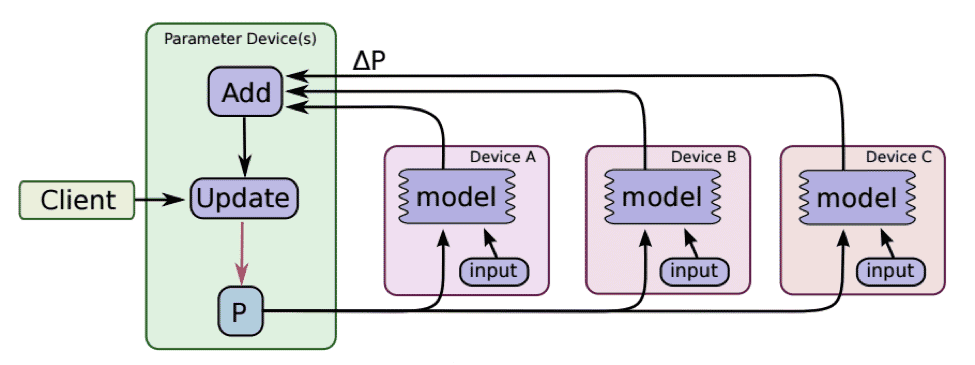

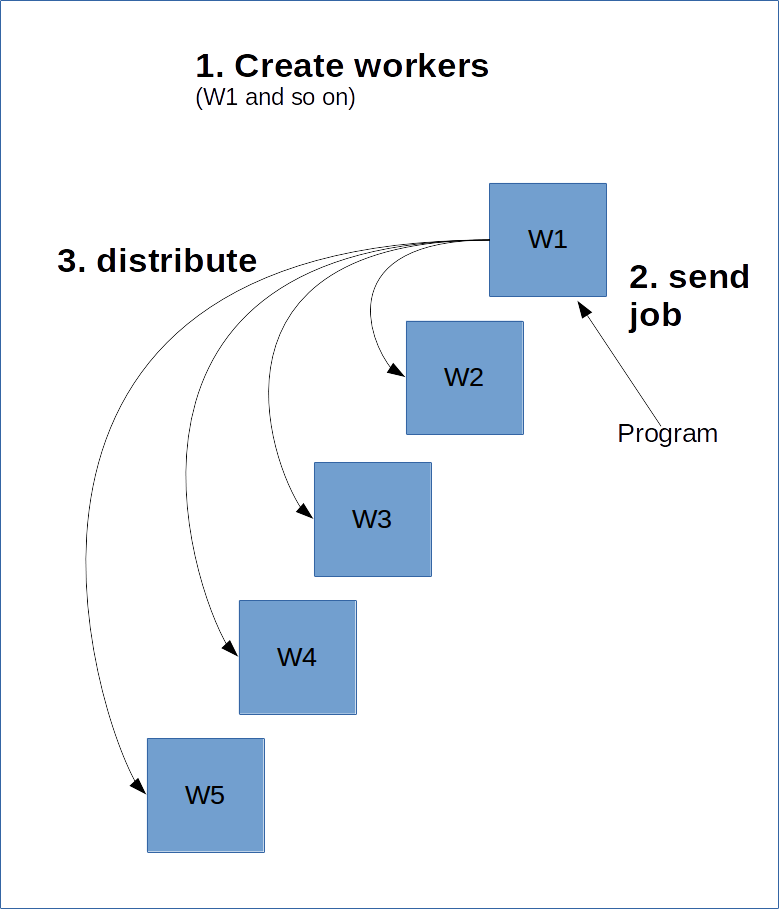

Launching TensorFlow distributed training easily with Horovod or Parameter Servers in Amazon SageMaker | AWS Machine Learning Blog

TensorFlow as a Distributed Virtual Machine | Open Data Science

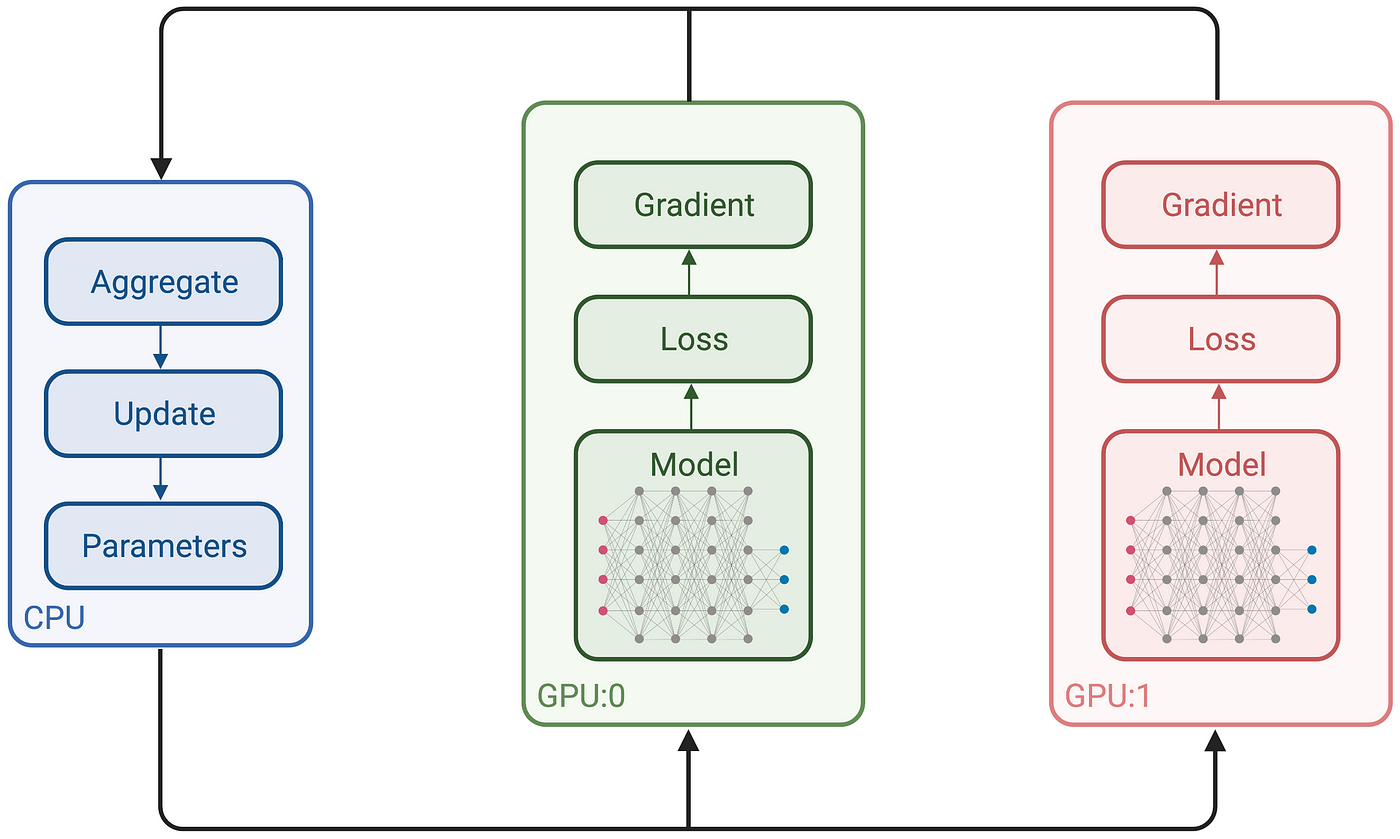

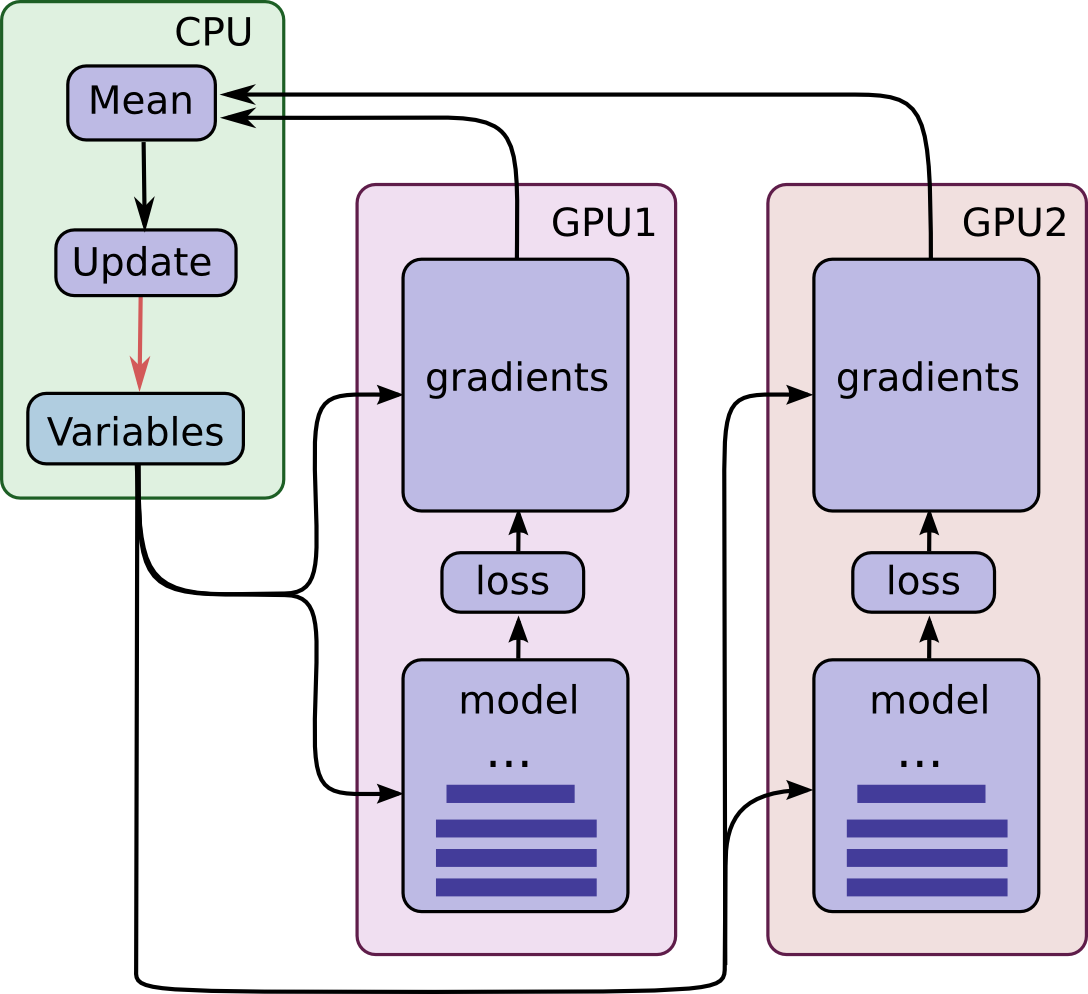

GitHub - sayakpaul/tf.keras-Distributed-Training: Shows how to use MirroredStrategy to distribute training workloads when using the regular fit and compile paradigm in tf.keras.