Accelerating Sequential Python User-Defined Functions with RAPIDS on GPUs for 100X Speedups | NVIDIA Technical Blog

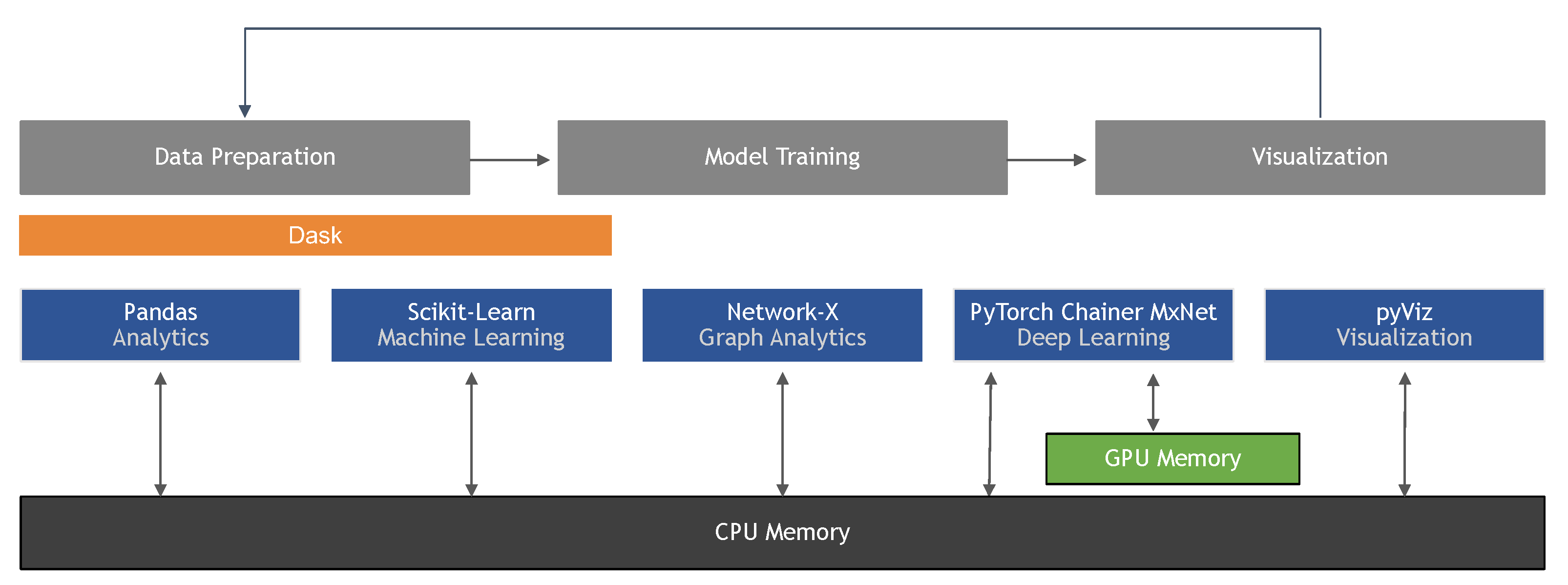

Productive and Efficient Data Science with Python: With Modularizing, Memory Profiles, and Parallel/GPU Processing | by Tirthajyoti Sarkar

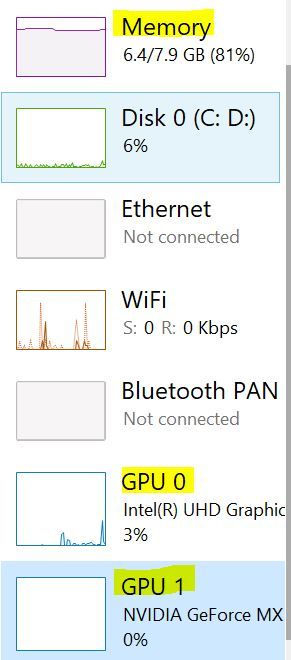

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

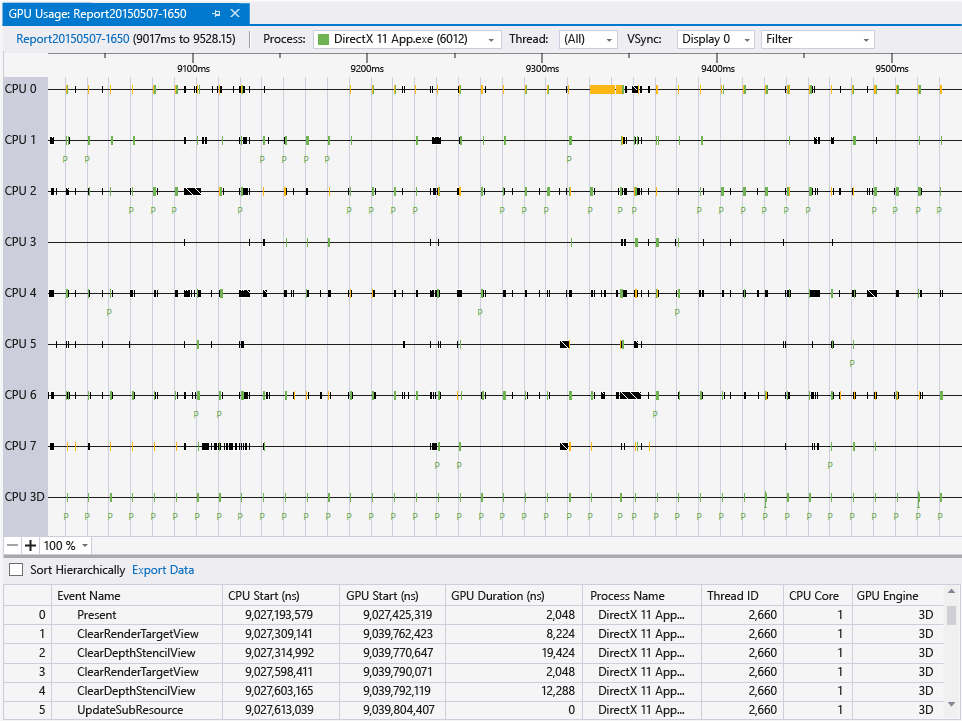

How We Boosted Video Processing Speed 5x by Optimizing GPU Usage in Python | by Lightricks Tech Blog | Medium