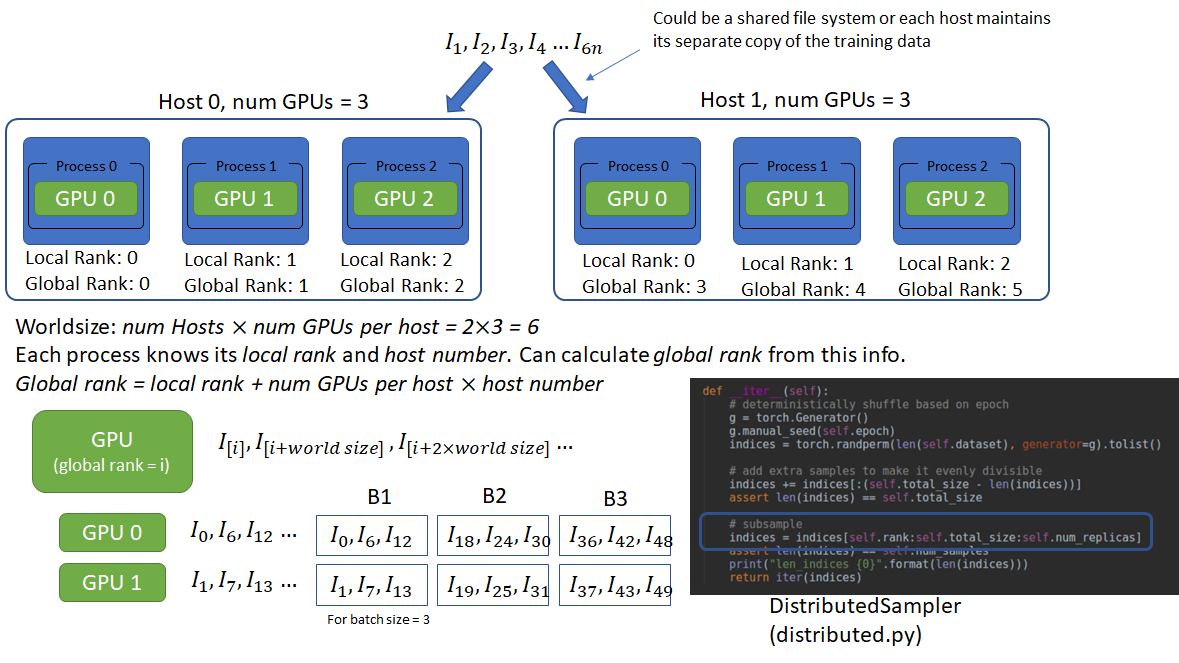

Training Memory-Intensive Deep Learning Models with PyTorch's Distributed Data Parallel | Naga's Blog

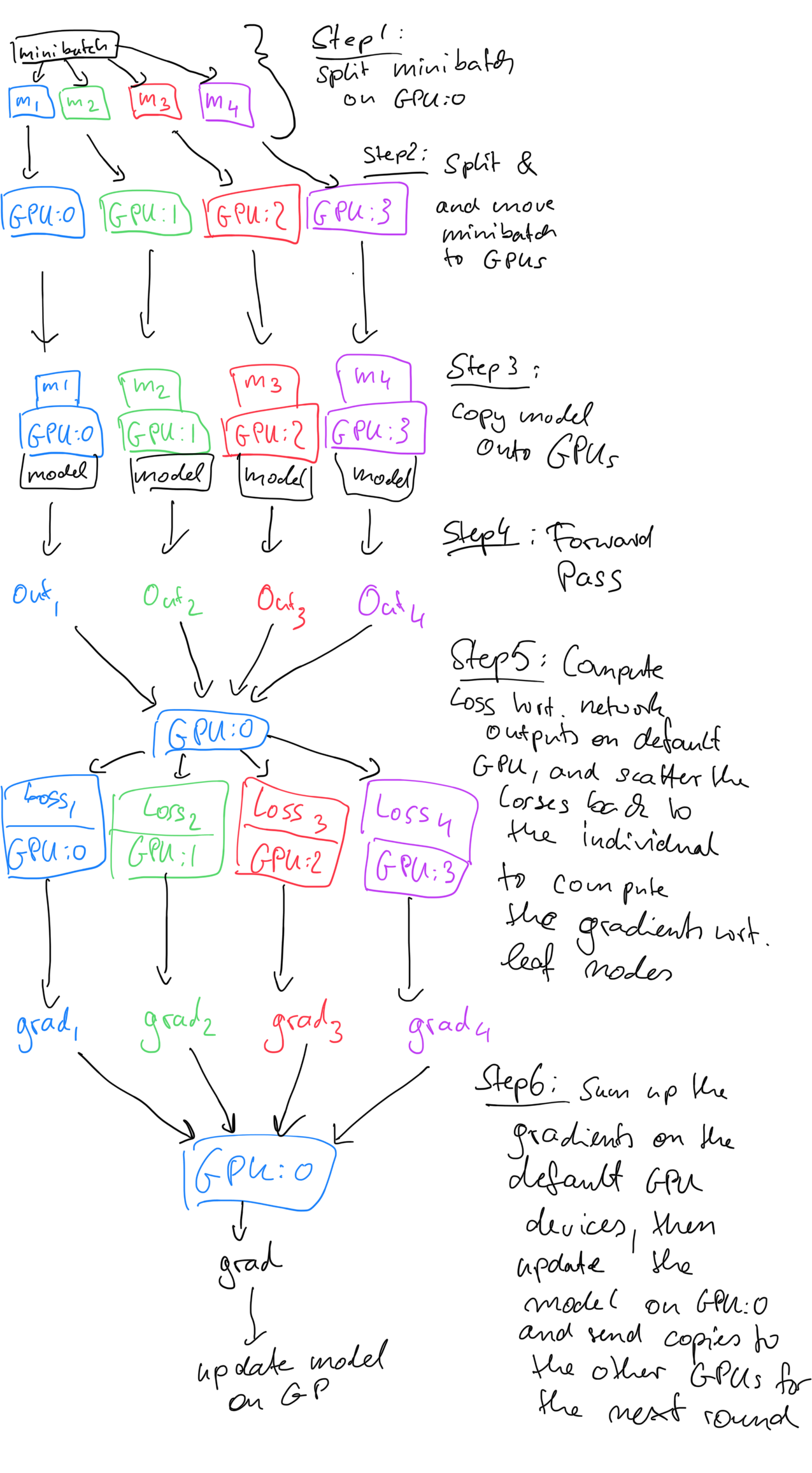

Getting Started with Fully Sharded Data Parallel(FSDP) — PyTorch Tutorials 2.0.1+cu117 documentation

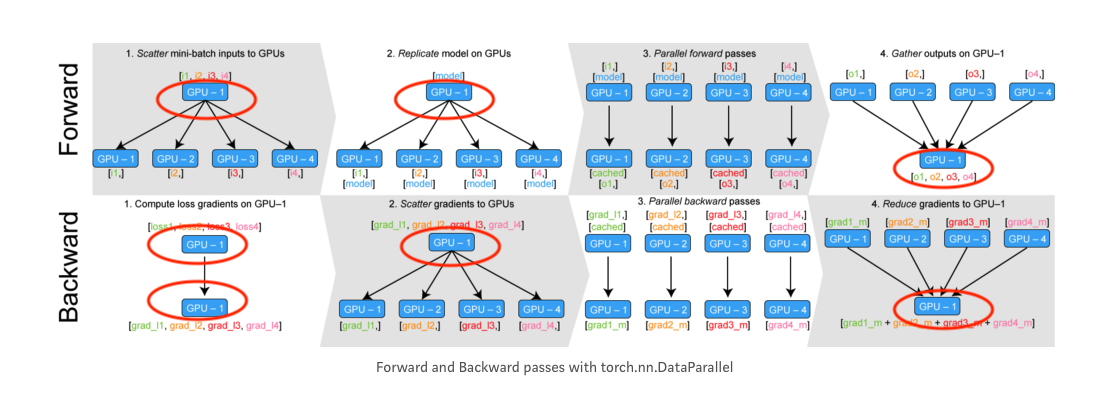

Training Memory-Intensive Deep Learning Models with PyTorch's Distributed Data Parallel | Naga's Blog

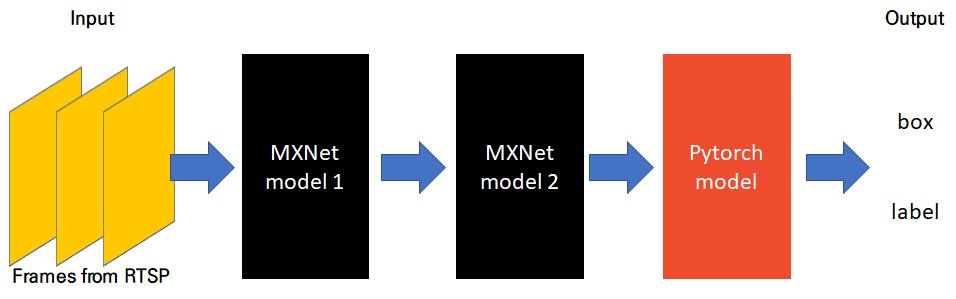

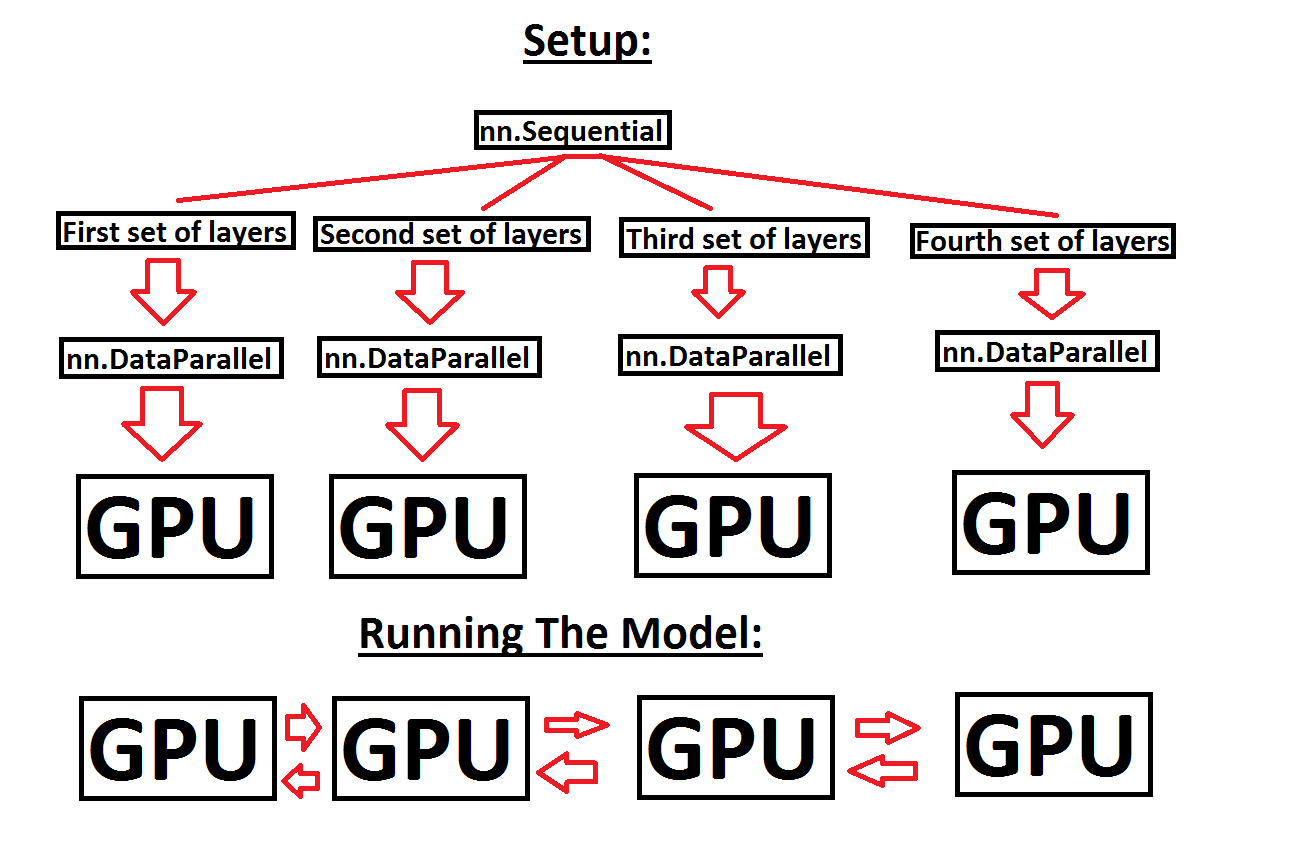

Help with running a sequential model across multiple GPUs, in order to make use of more GPU memory - PyTorch Forums

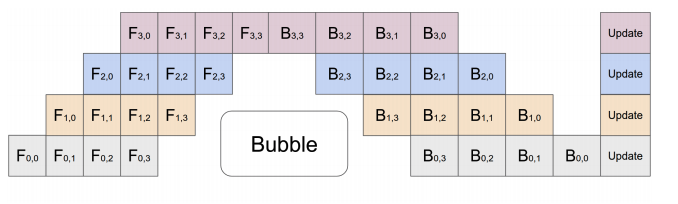

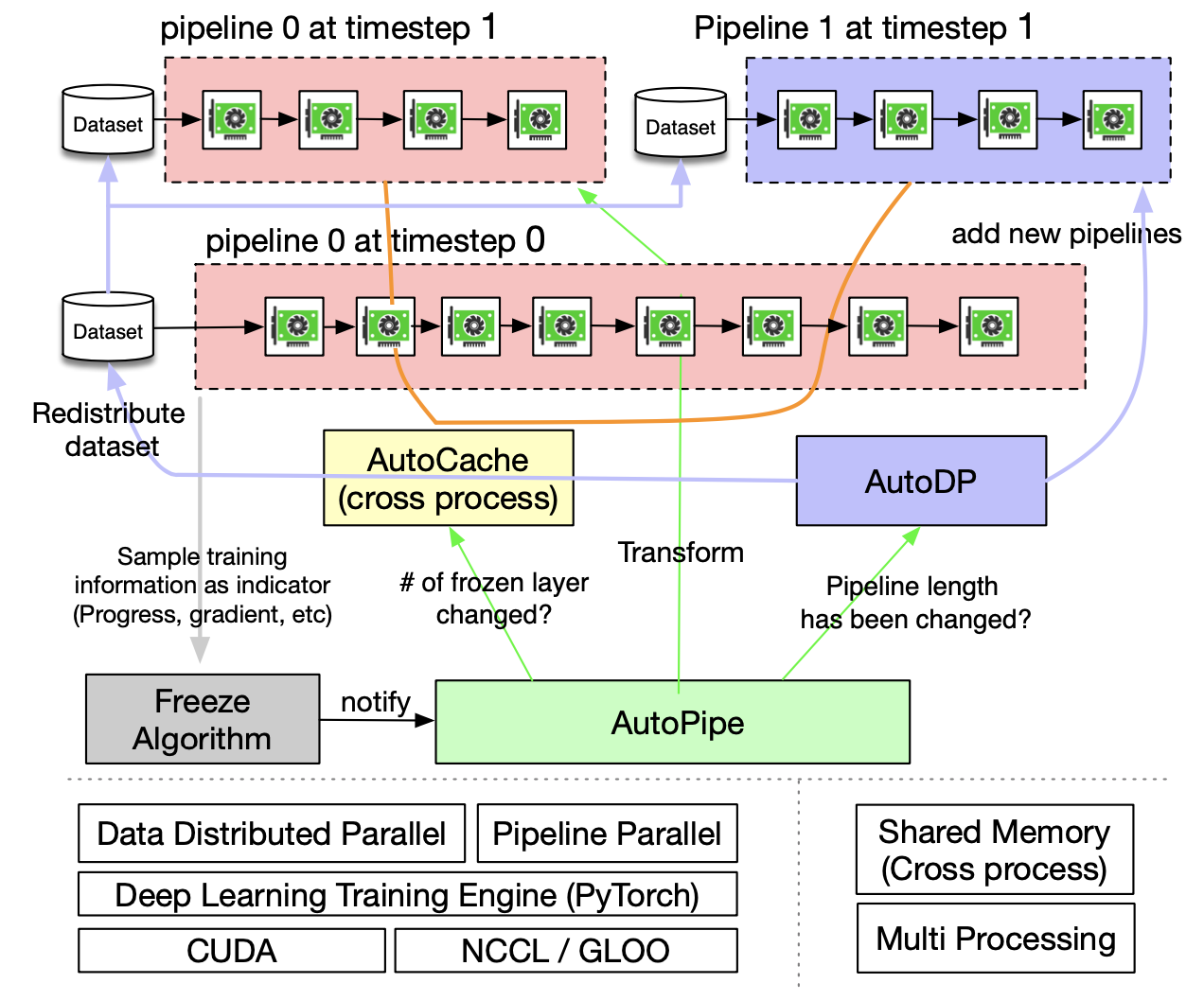

PipeTransformer: Automated Elastic Pipelining for Distributed Training of Large-scale Models | PyTorch