Accelerate computer vision training using GPU preprocessing with NVIDIA DALI on Amazon SageMaker | AWS Machine Learning Blog

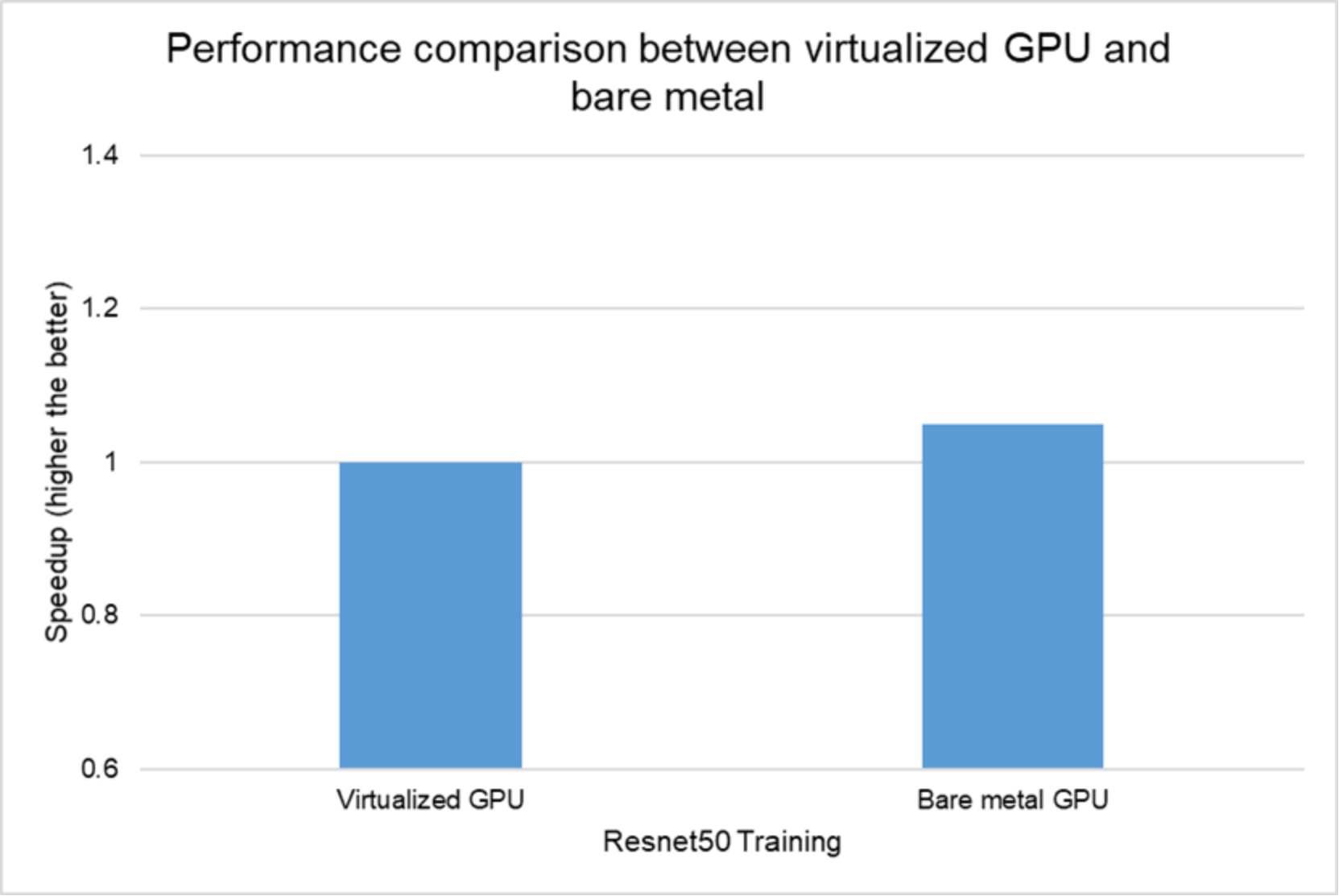

Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

Performance results | Design Guide—Virtualizing GPUs for AI with VMware and NVIDIA Based on Dell Infrastructure | Dell Technologies Info Hub

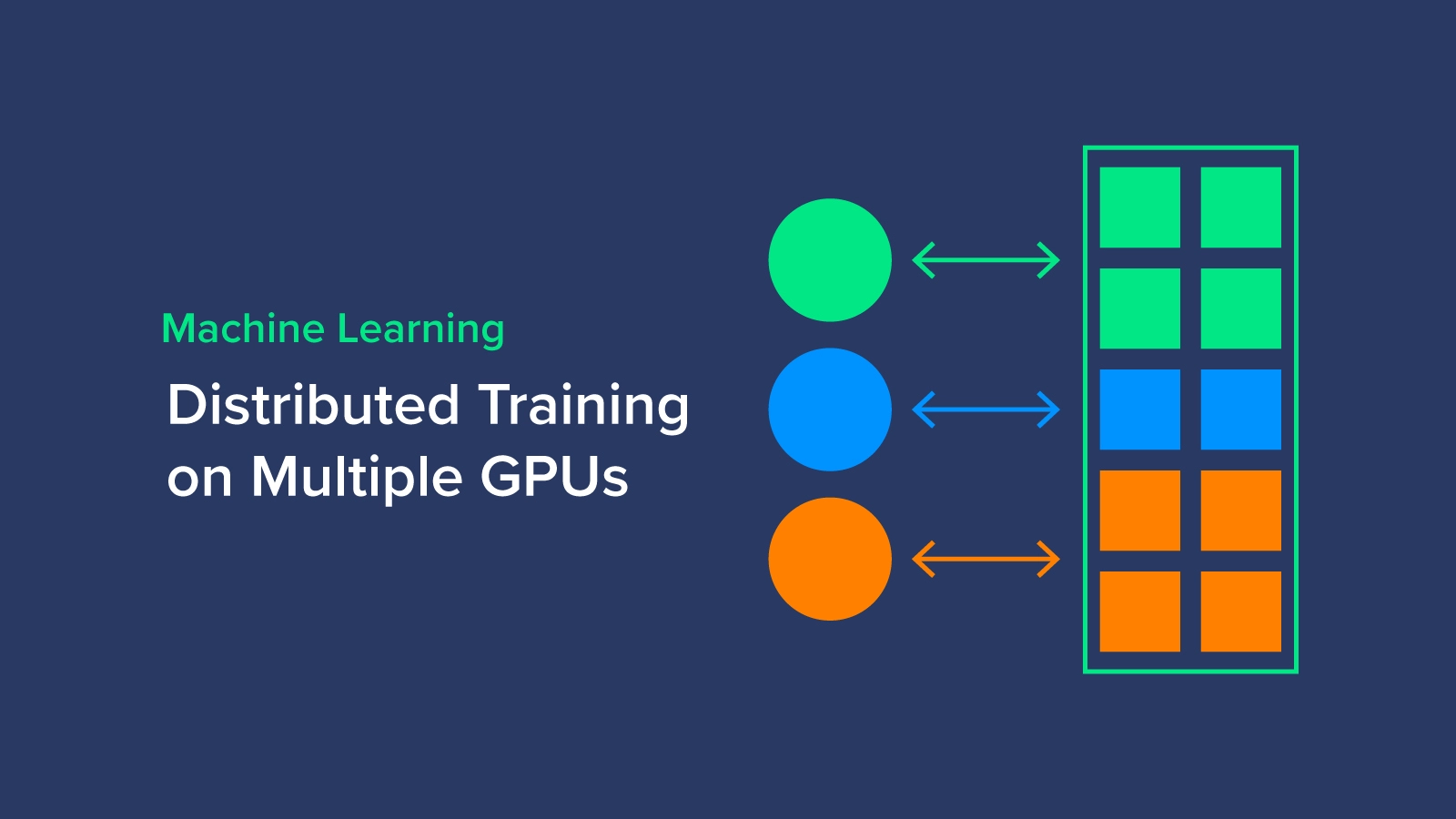

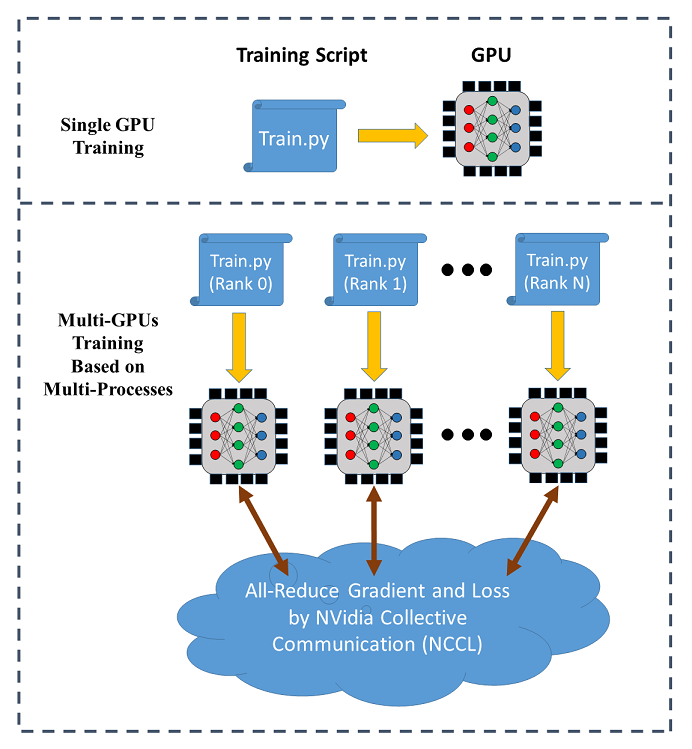

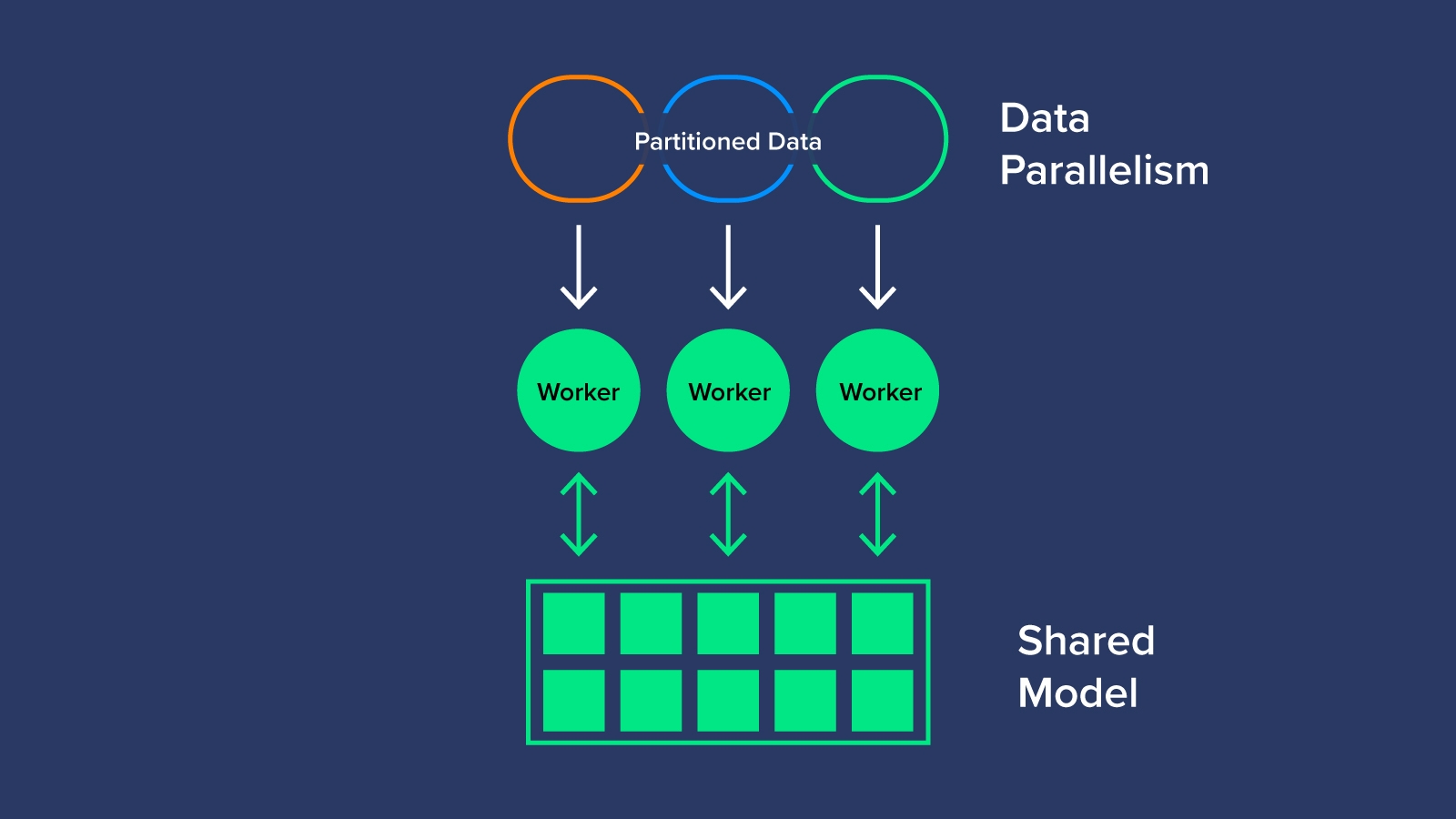

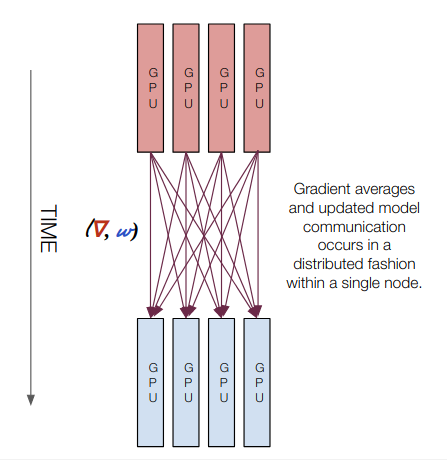

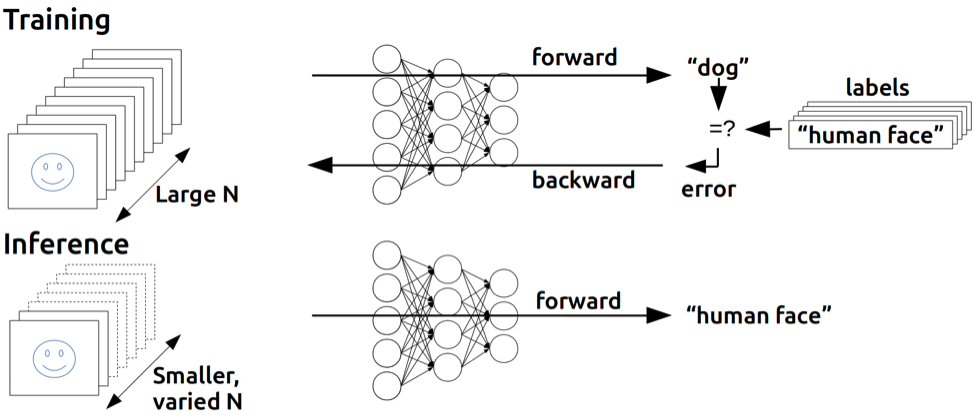

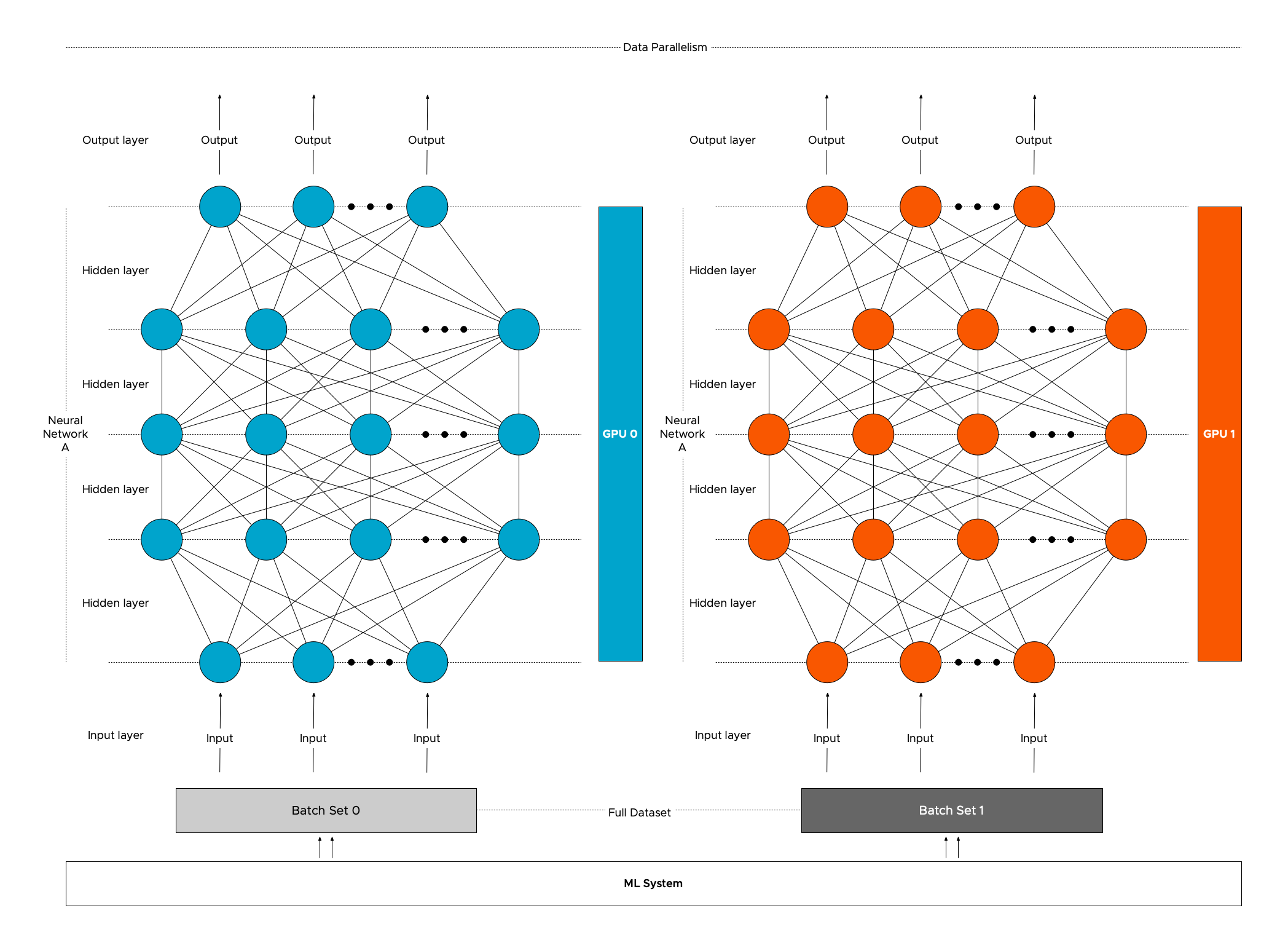

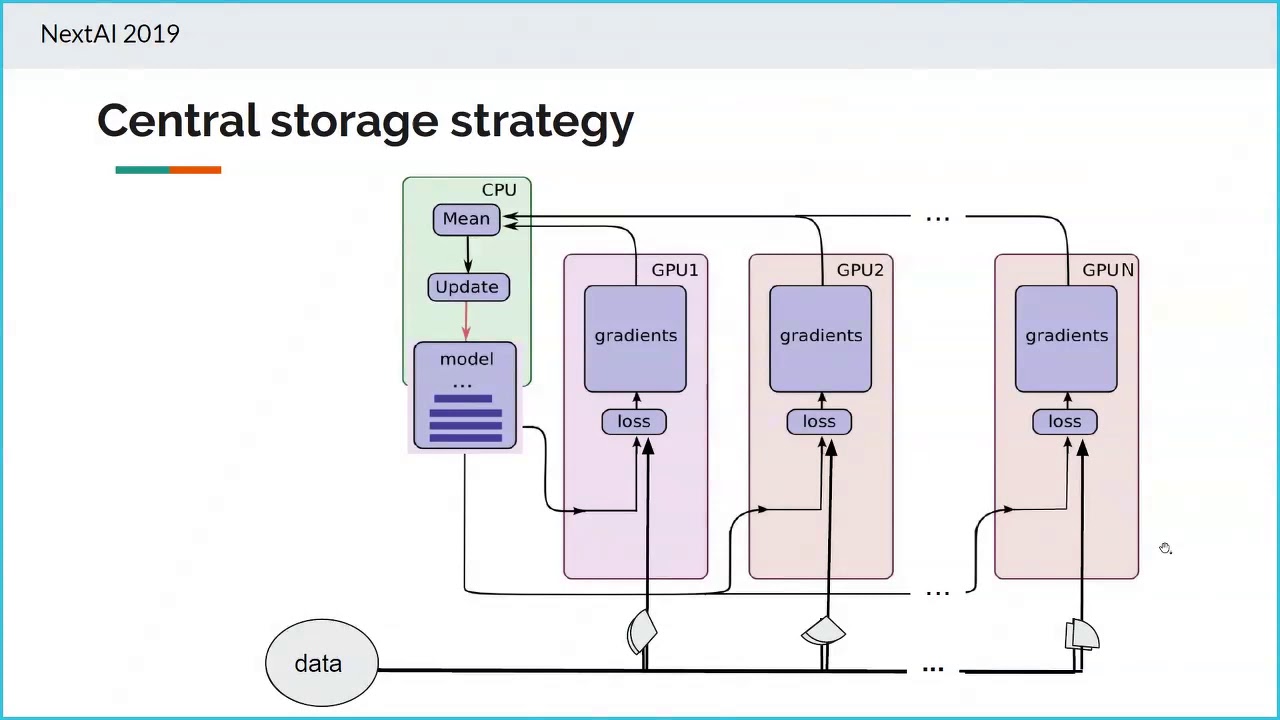

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer