6th KAUST-NVIDIA Workshop on "Accelerating Scientific Applications using GPUs" | www.hpc.kaust.edu.sa

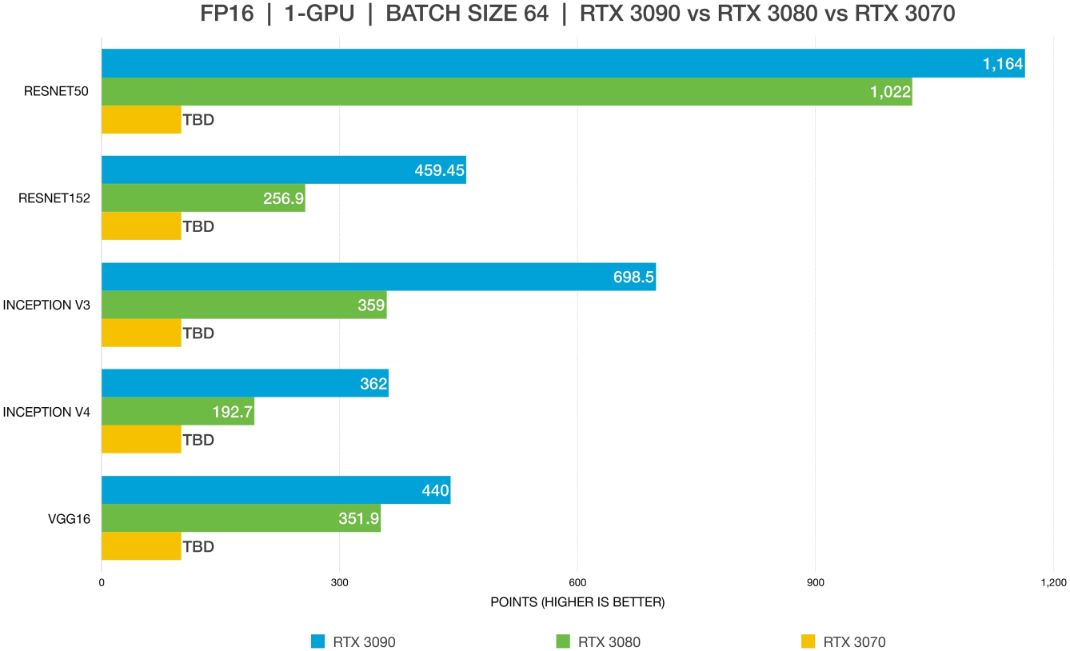

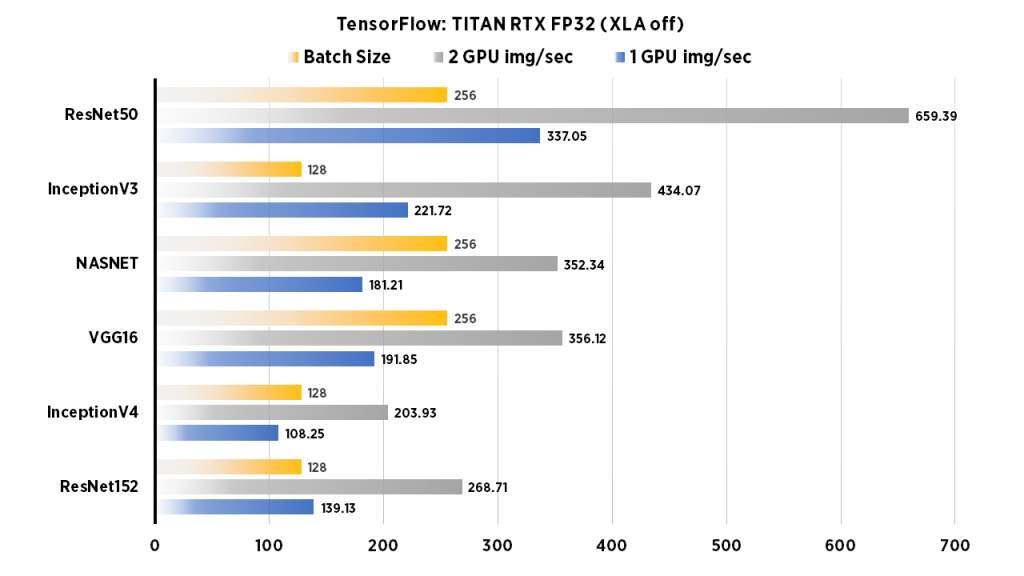

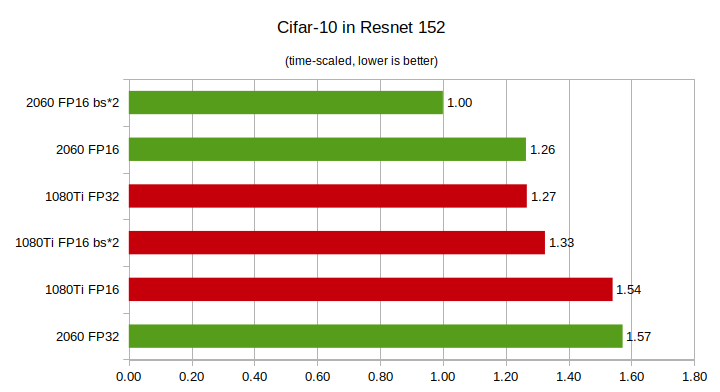

RTX 2060 Vs GTX 1080Ti Deep Learning Benchmarks: Cheapest RTX card Vs Most Expensive GTX card | by Eric Perbos-Brinck | Towards Data Science

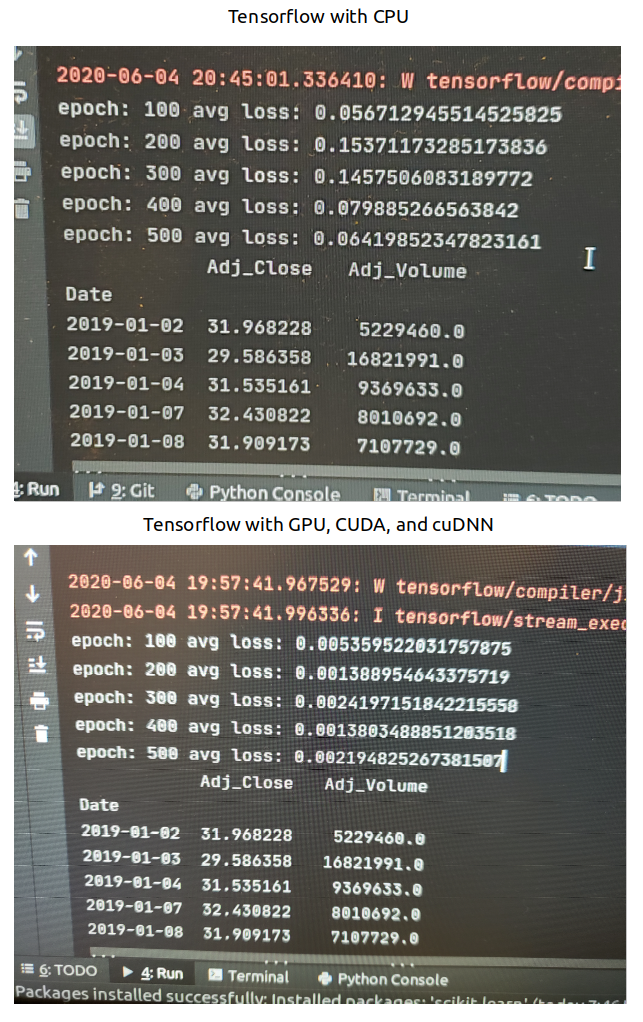

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

GTC Silicon Valley-2019: Maximizing Utilization of NVIDIA Virtual GPUs in VMware vSphere for End-to-End Machine Learning | NVIDIA Developer

Performance comparison of different GPUs and TPU for CNN, RNN and their...

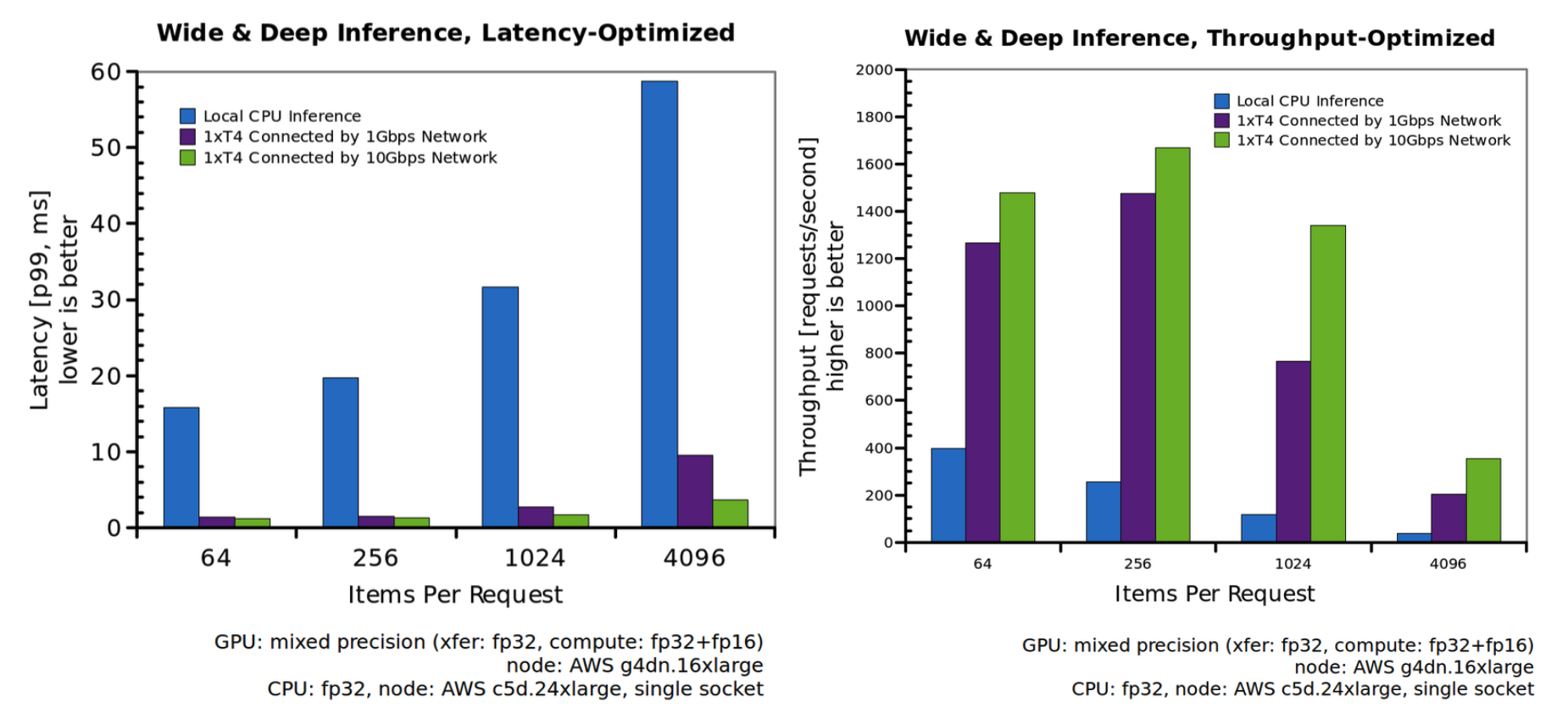

GTC-DC 2019: Accelerating Deep Learning with NVIDIA GPUs and Mellanox Interconnect - Overview | NVIDIA Developer

GTC-DC 2019: GPU-Accelerated Deep Learning for Solar Feature Recognition in NASA Images | NVIDIA Developer