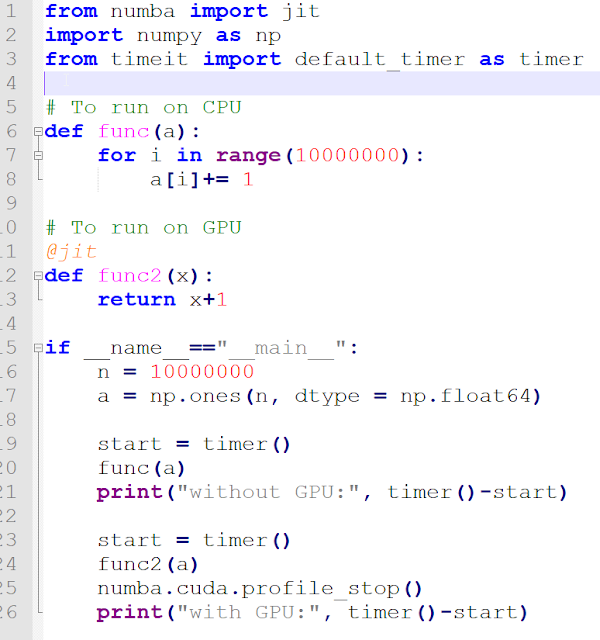

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

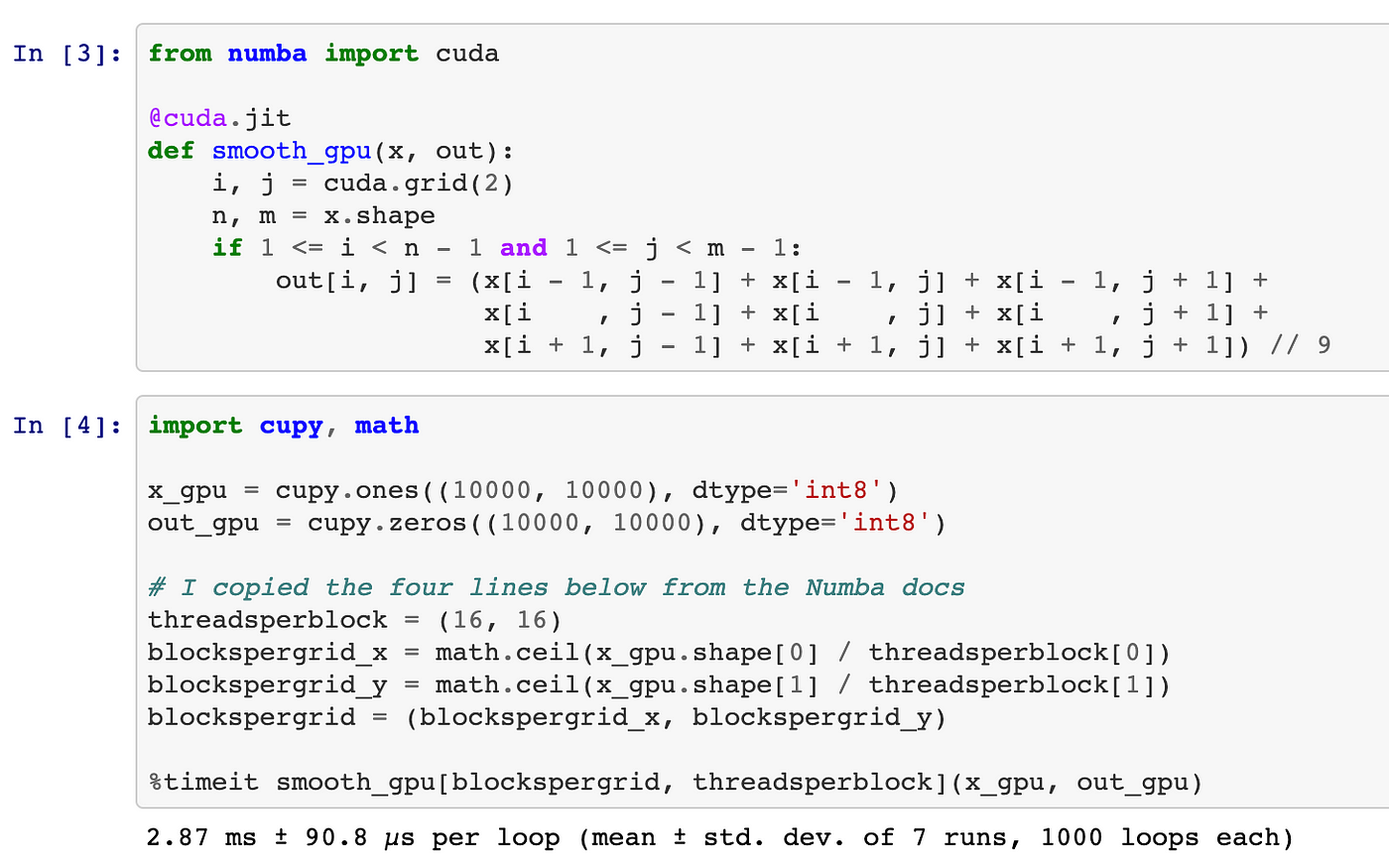

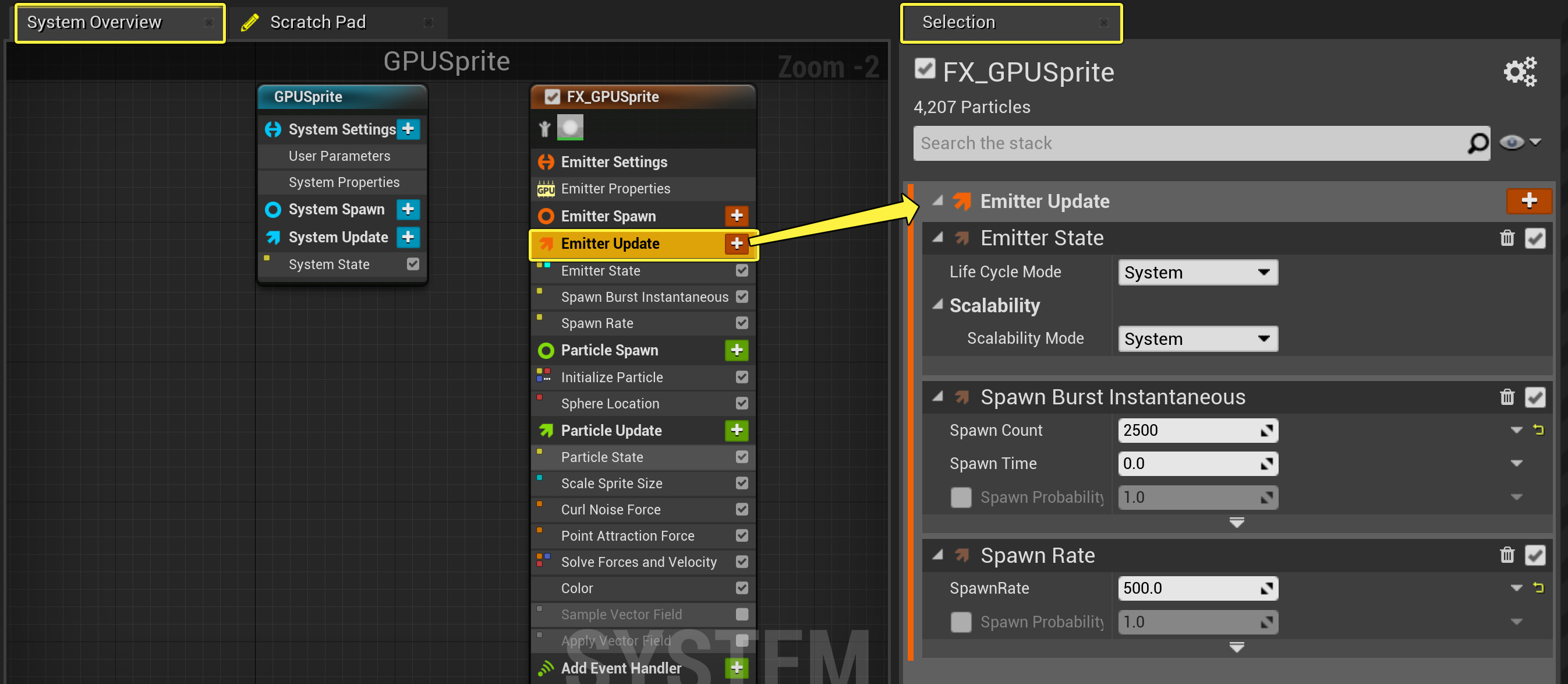

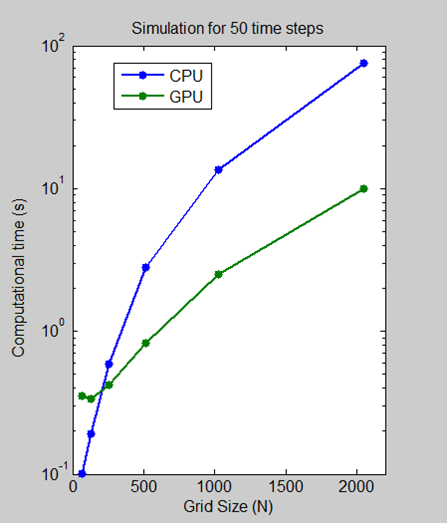

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

Force Full Usage of Dedicated VRAM instead of Shared Memory (RAM) · Issue #45 · microsoft/tensorflow-directml · GitHub

How to Install TensorFlow with GPU Support on Windows 10 (Without Installing CUDA) UPDATED! | Puget Systems

Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

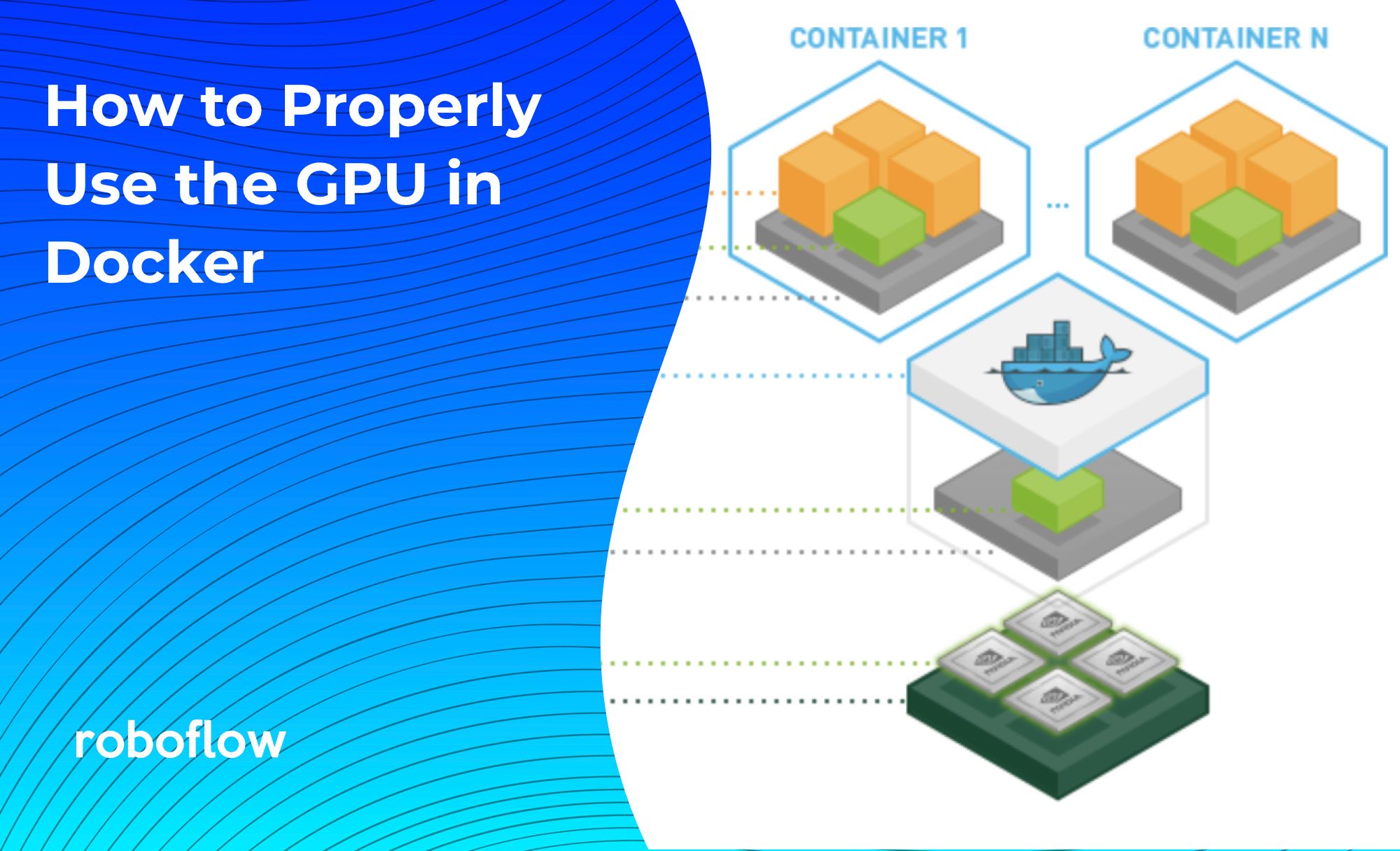

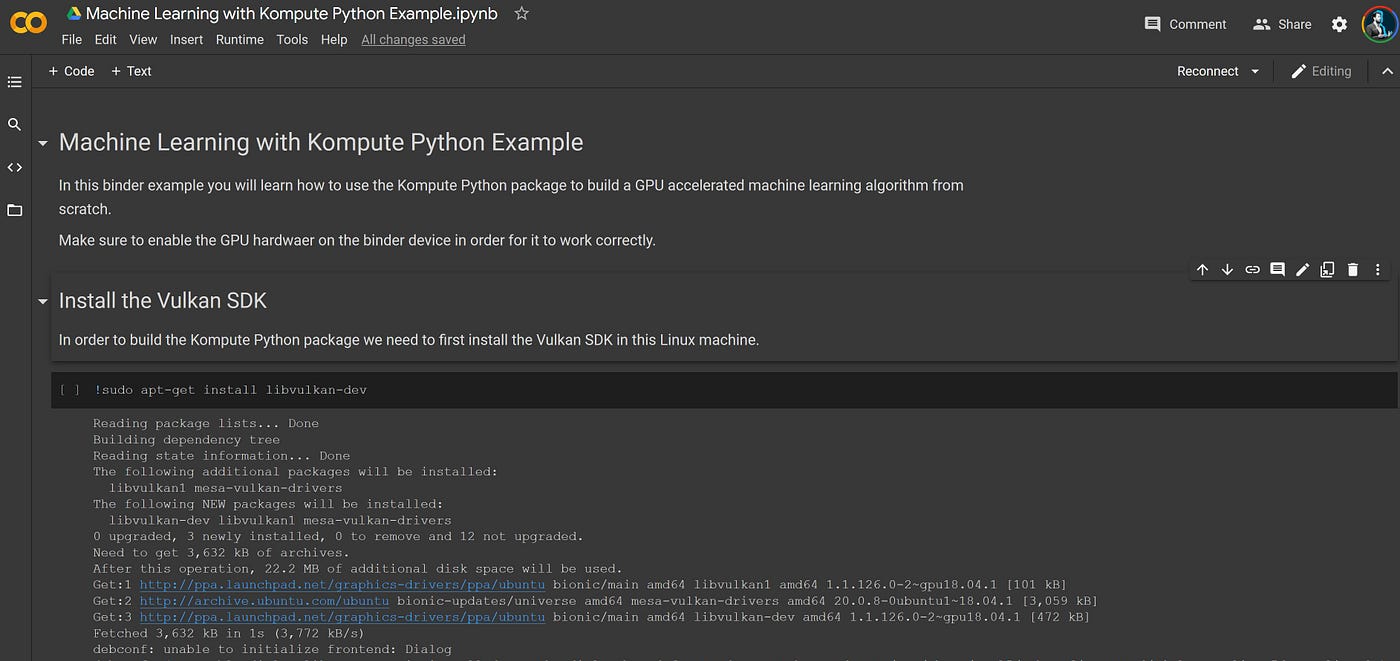

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science