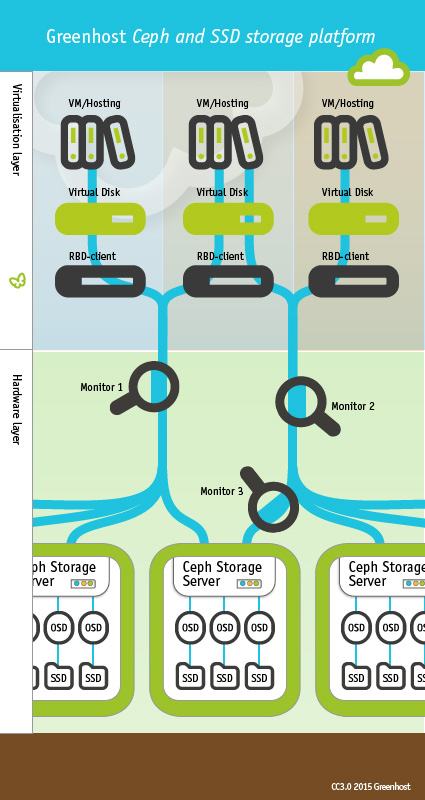

Use CephFS and S3 for medical applications | Distributed cloud computing and storage provider based in Taiwan | integrated

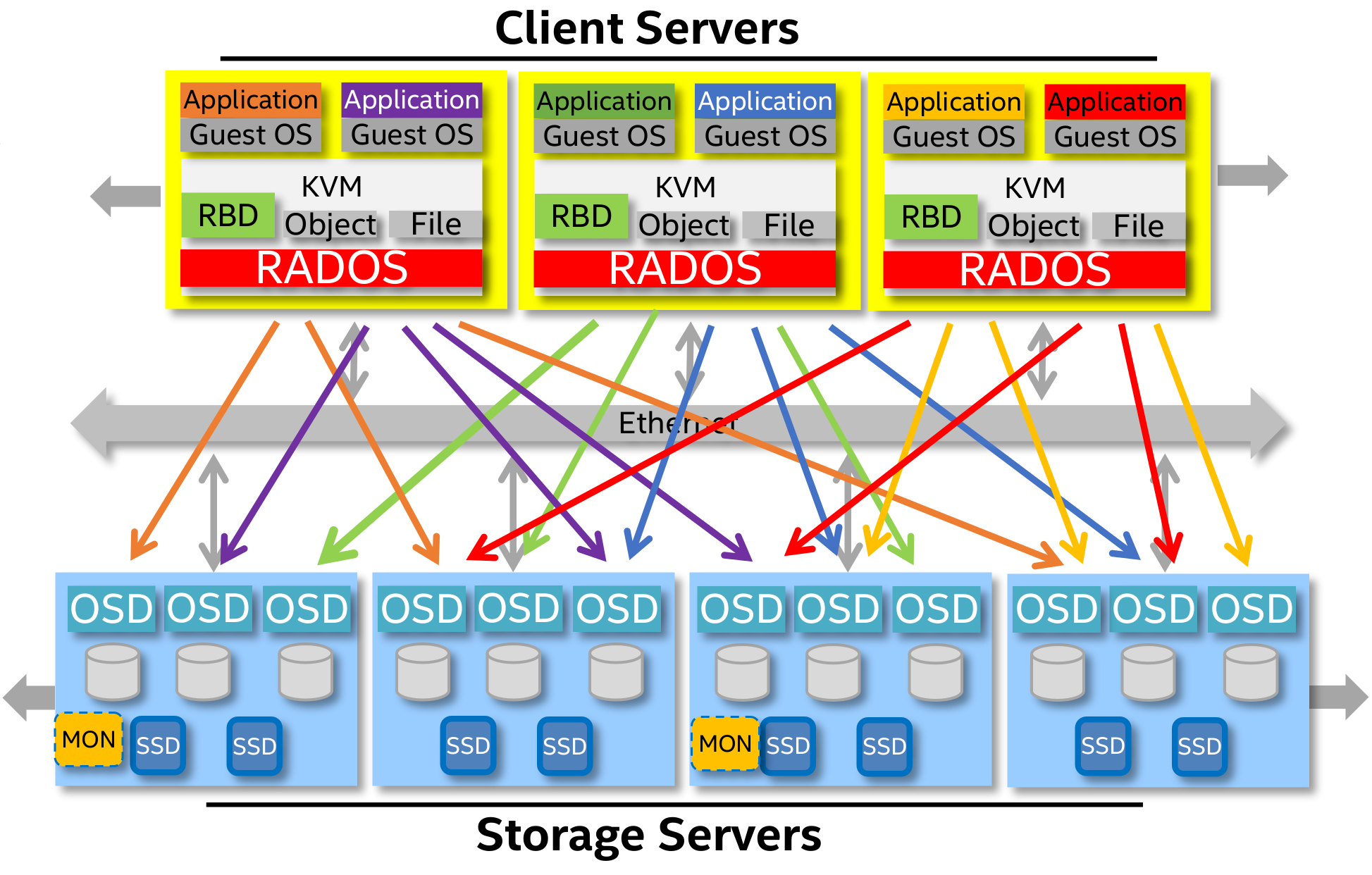

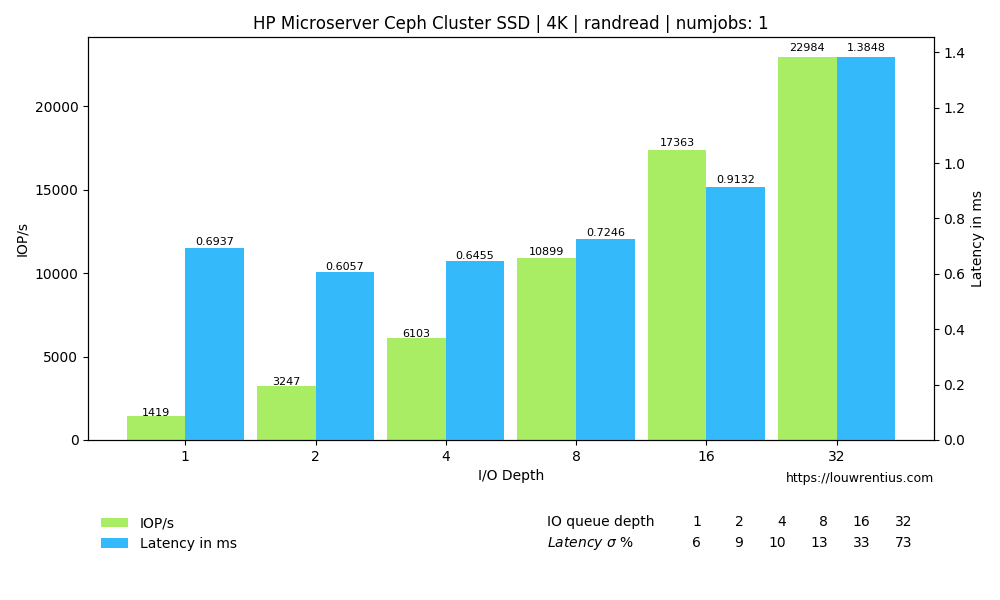

To Improve Ceph performance for VMware, install SSDs in VMware hosts, NOT OSD hosts. - VirtunetSystems

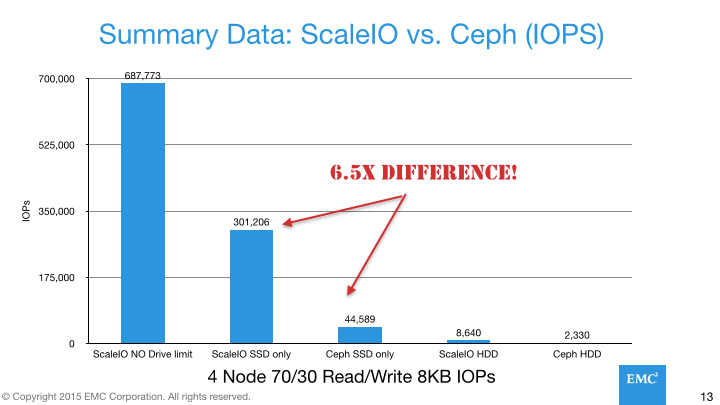

Killing the Storage Unicorn: Purpose-Built ScaleIO Spanks Multi-Purpose Ceph on Performance - CloudAve