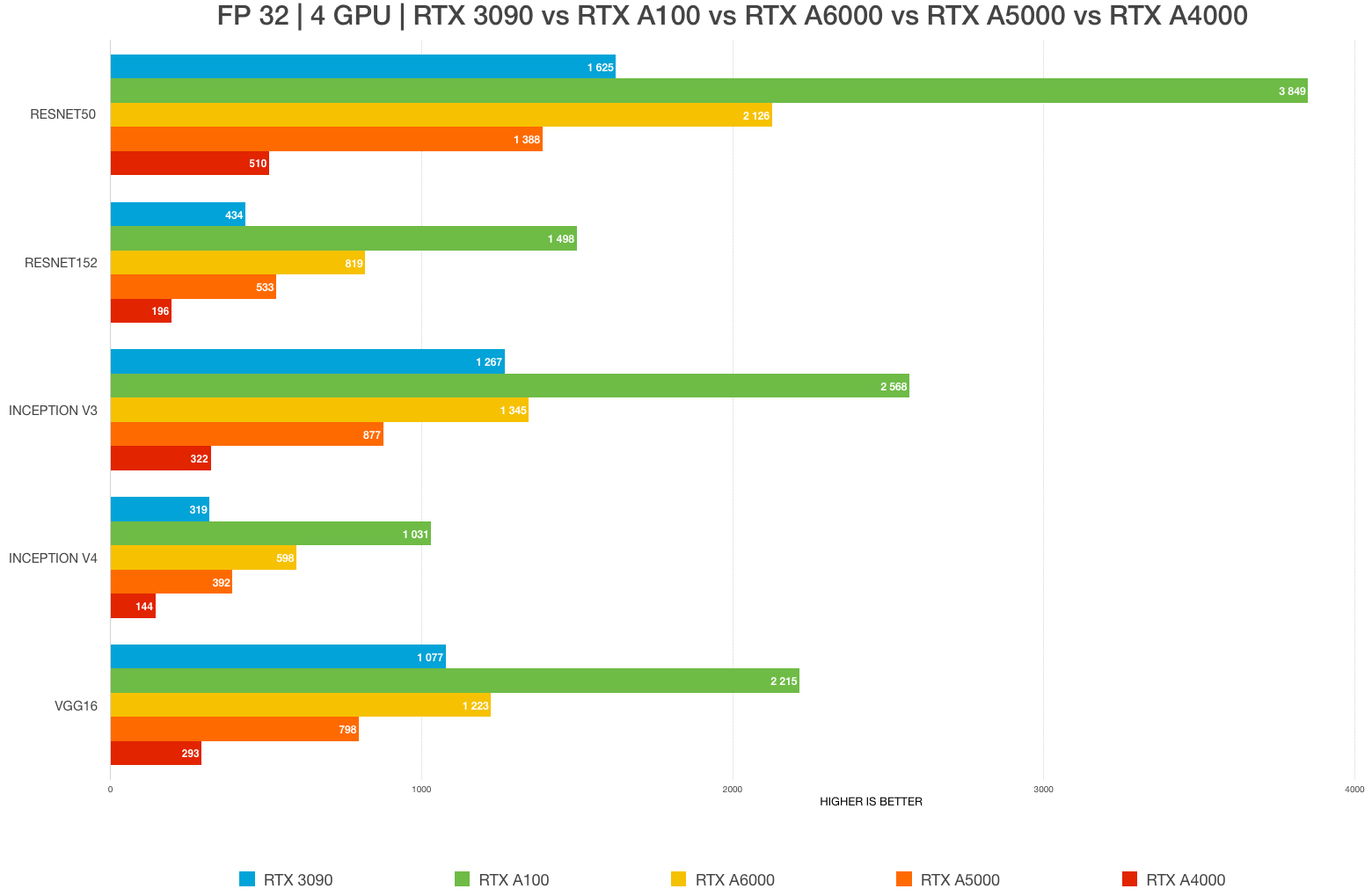

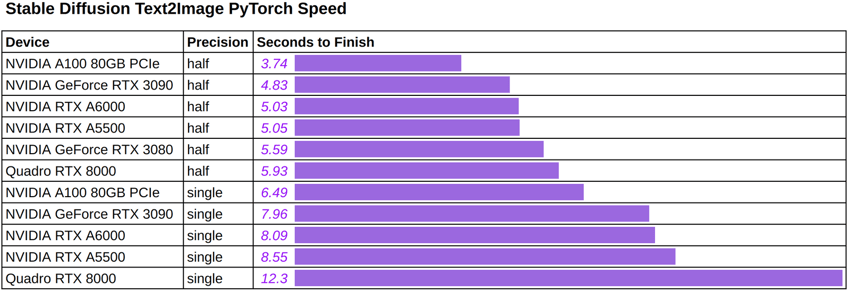

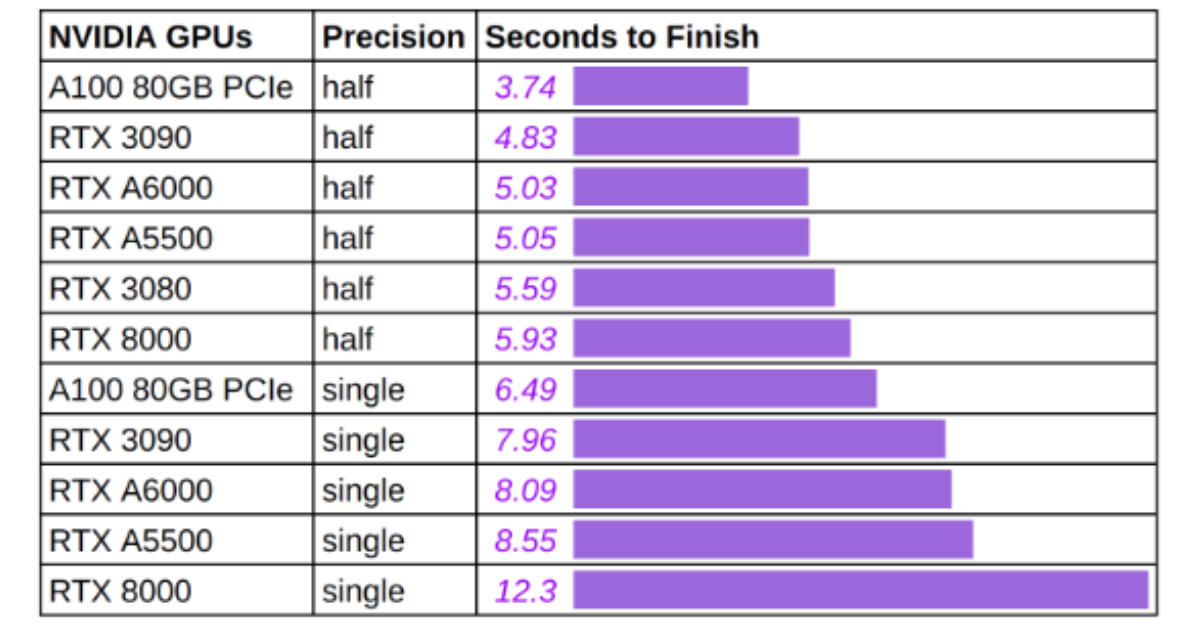

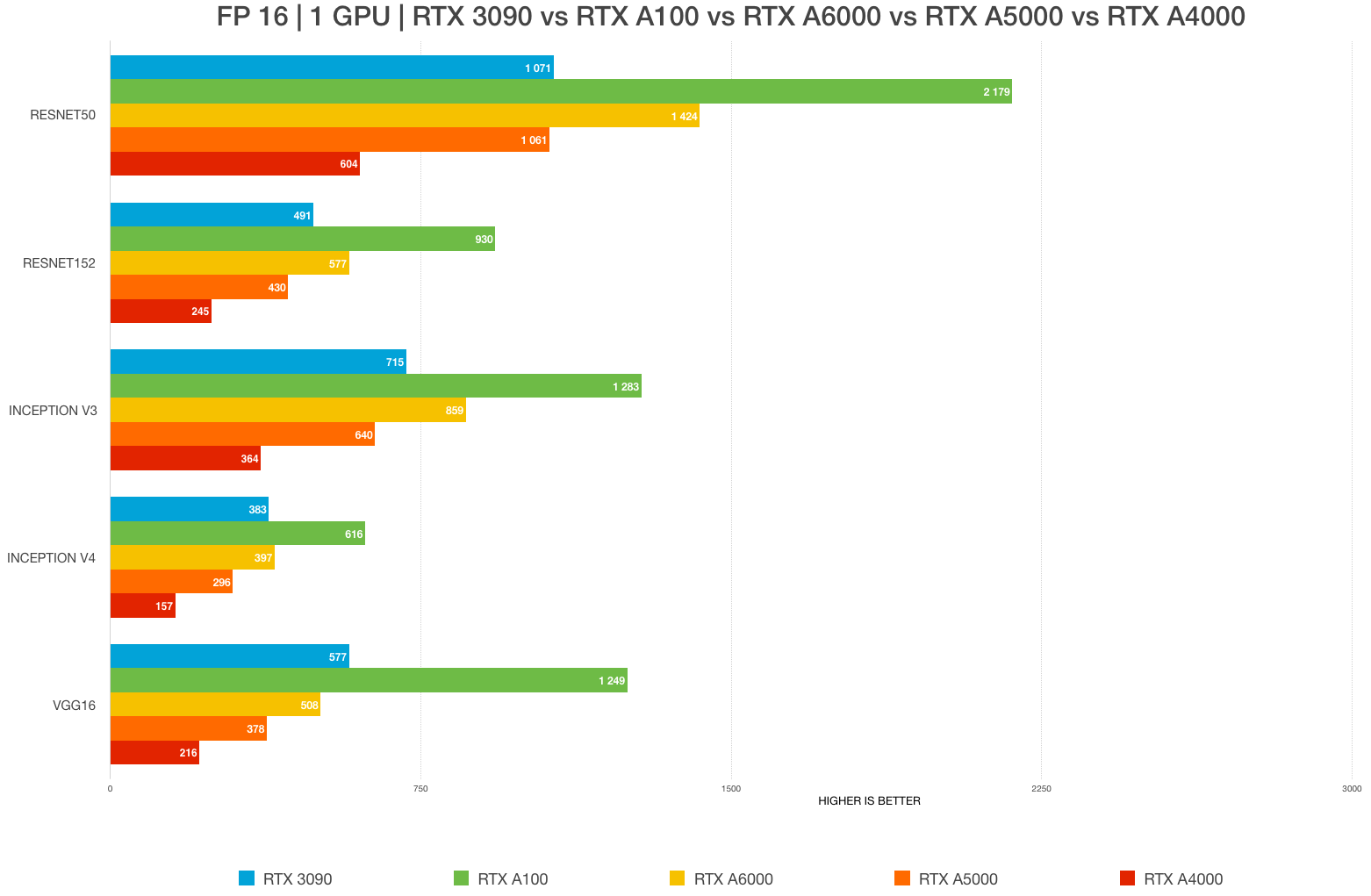

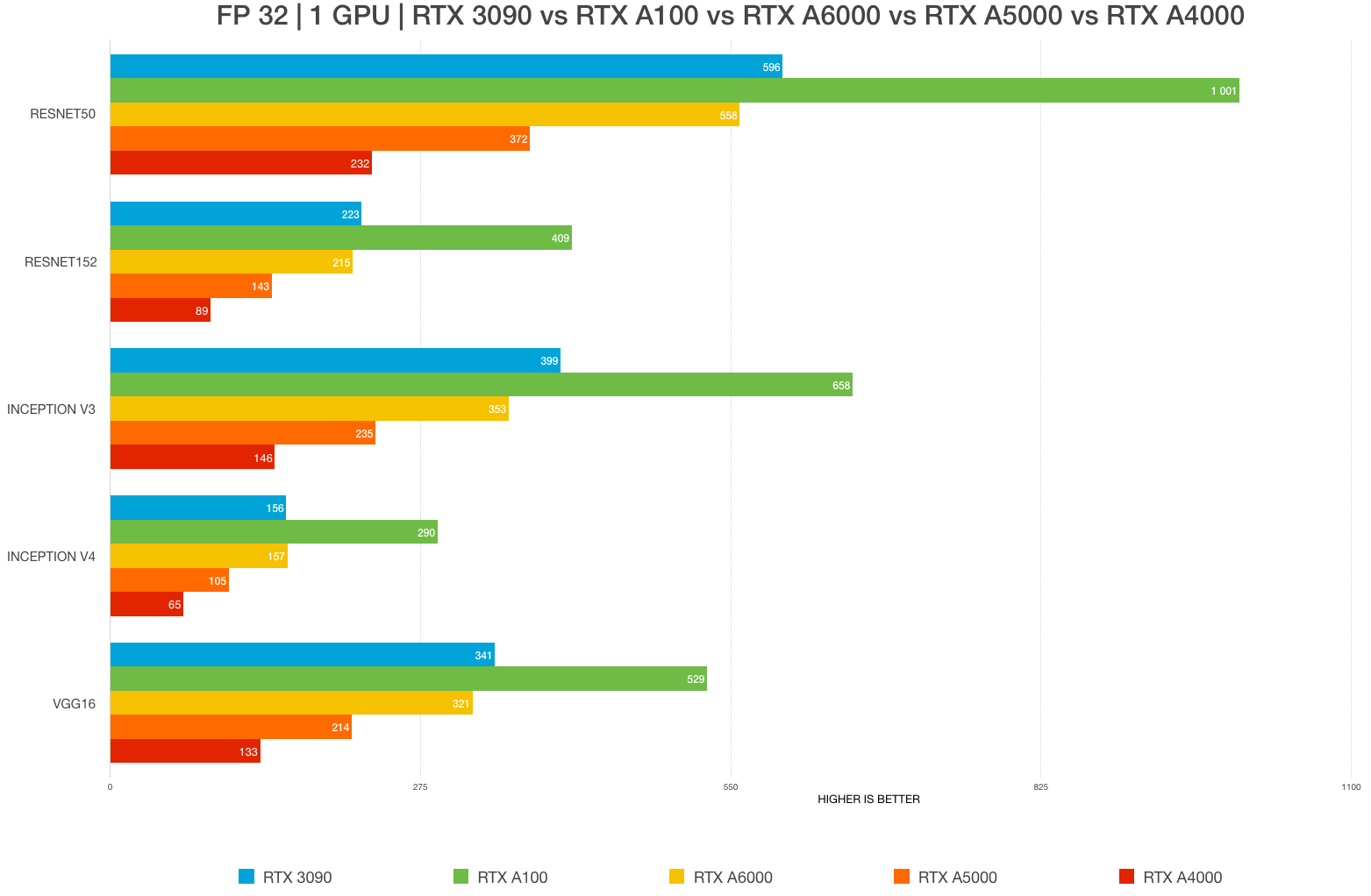

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

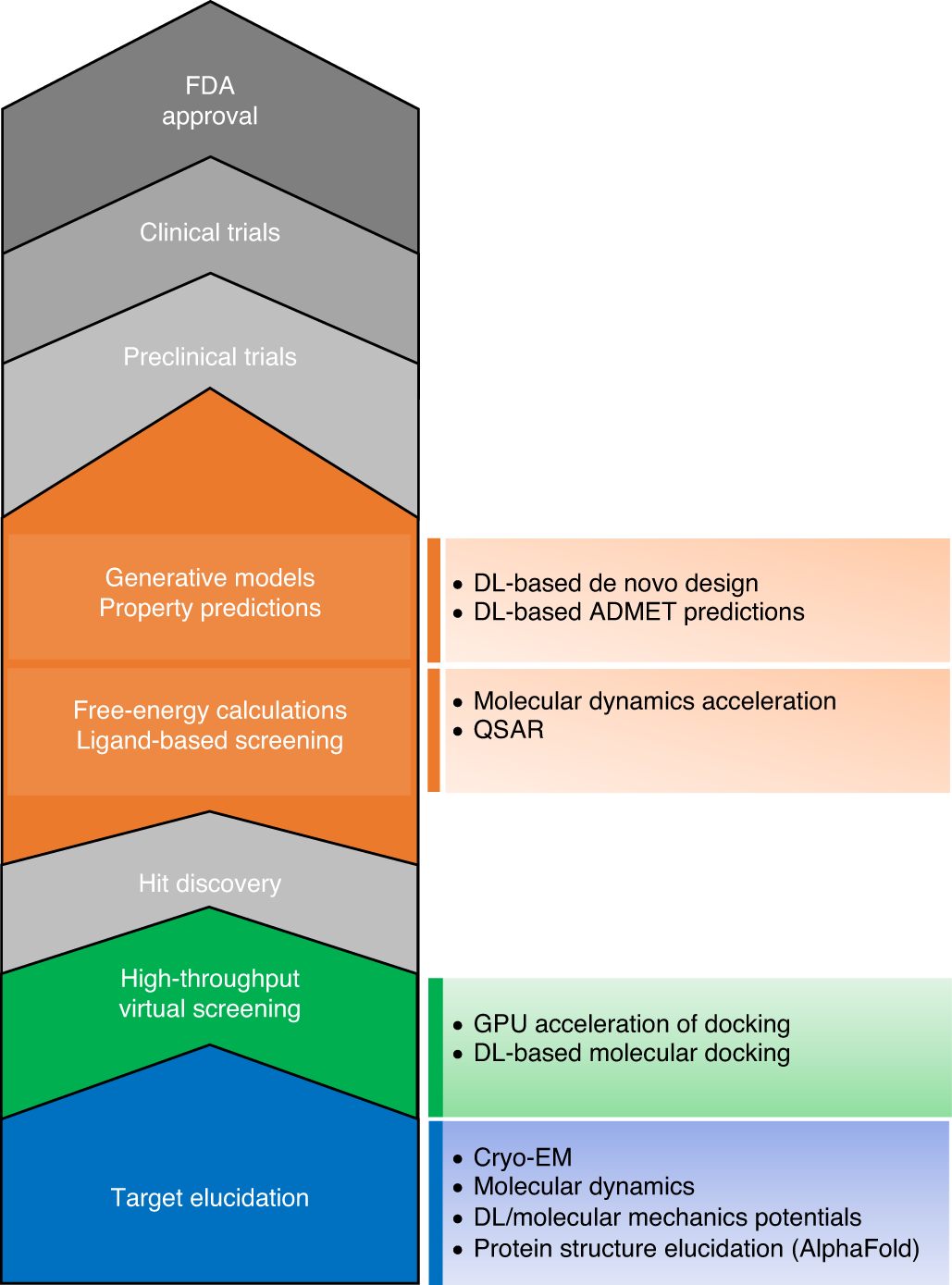

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

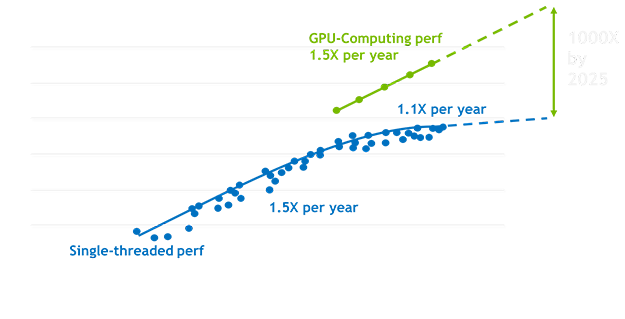

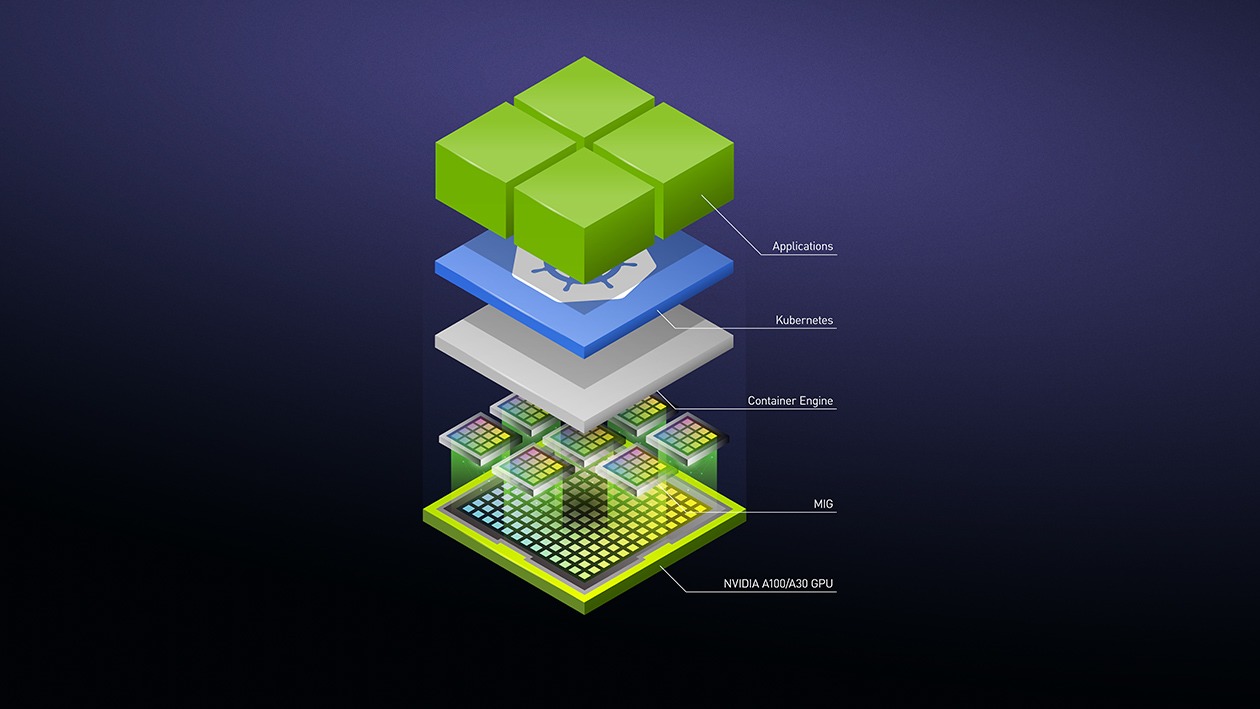

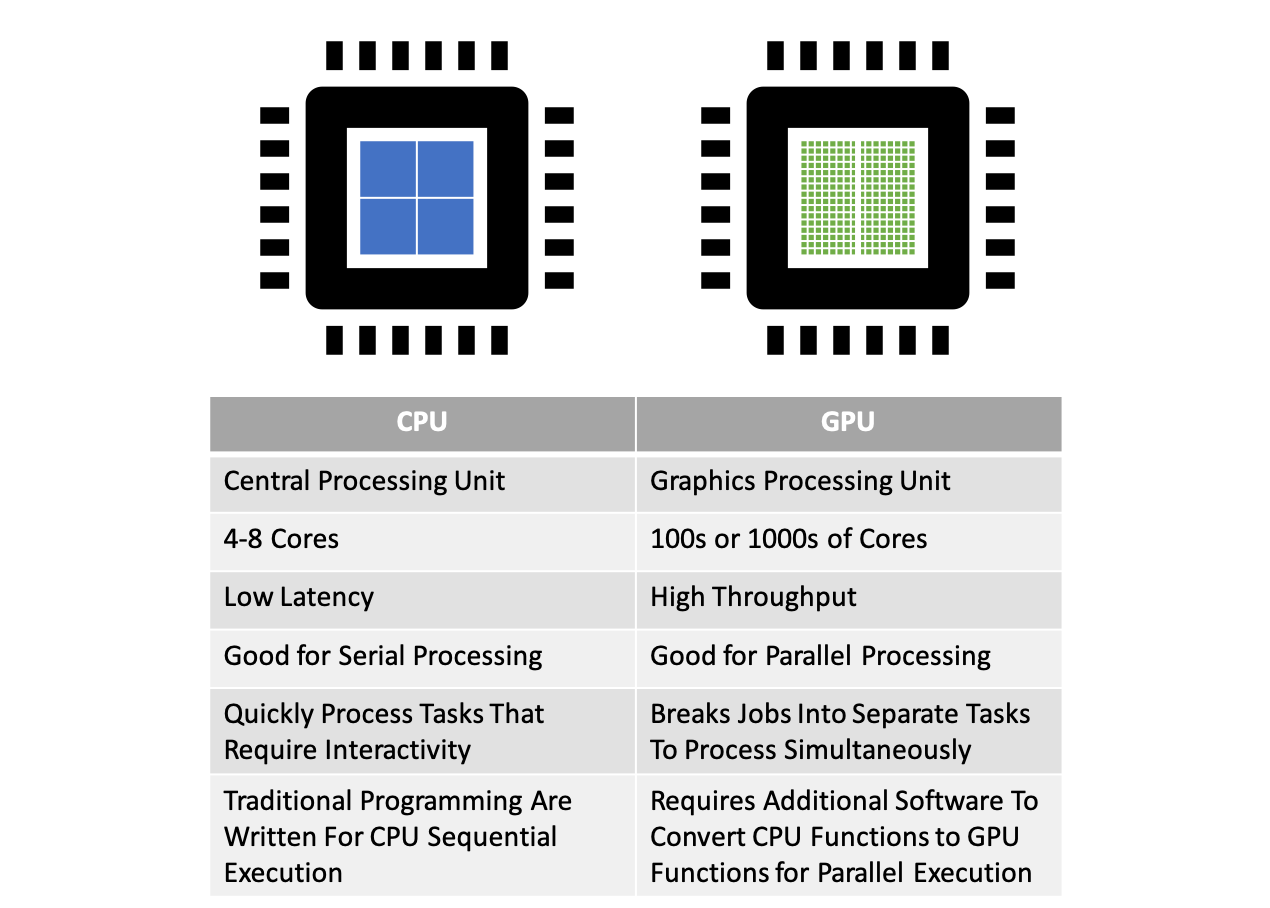

Parallel Computing — Upgrade Your Data Science with GPU Computing | by Kevin C Lee | Towards Data Science

How GPU Computing literally saved me at work? | by Abhishek Mungoli | Walmart Global Tech Blog | Medium